Low-Latency Matching External Records

Given a small number of incoming or external records (up to a thousand), identify matches between the incoming records and existing records, or between the incoming records and existing clusters.

Low-latency match (LLM) is an API-only feature that provides fast match suggestions for a small batch of records. Send a small number of records (<10,000) to Tamr Core's match API, and Tamr Core quickly returns the most likely match or matches for each record, without rerunning the pair generation and clustering jobs. This process uses minimal computational resources: even when Tamr Core stores a large number of records (for example, tens of millions), it can return an answer within seconds.

Note: The low-latency matching service is available on TAMR_MATCH_BIND_PORT (9170 by default), not on TAMR_UNIFY_BIND_PORT (9100 by default). Interactive Swagger API documentation for the low-latency match endpoints is available at http://<tamr_ip>:9170/docs. (See the Configuration Variable Reference for information about changing the match service port.) The endpoints for Query LLM Status, Query Last LLM Update, and Perform LLM Match are different from the endpoint for Update LLM Data, which uses port 9100 by default.

Important: This feature is in limited release. Before using the low-latency match (LLM) feature, contact Tamr Support at help@tamr.com to discuss your use case and for configuration assistance.

Before You Begin

Verify the following before completing the procedures in this topic:

- At least one mastering project exists (Creating a Project).

- The project includes at least one dataset, and the dataset has been schema mapped to the project's unified dataset (Adding a Dataset).

- You have run the Generate Record Pairs and Update Results jobs, or have imported a mastering model (Importing a Mastering Model).

- Input records contain non-null values for the attributes used in the blocking model, and have been pre-processed the same way that the mastering project's transformations process data.

- The port for the low-latency matching service (default 9170) is open to allow inbound access for your application.
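The blocking-attribute and pre-processing requirements above can be checked on the client before any records are sent. The following is a minimal Python sketch, assuming you know which attributes the blocking model uses; `normalize`, `is_missing`, `prepare_records`, and the attribute names in the example are hypothetical, and the trim-and-lowercase normalization is only a placeholder for whatever transformations your mastering project actually applies.

```python
from typing import Any, Dict, Iterable, List, Tuple

Record = Dict[str, Any]


def normalize(value: Any) -> Any:
    """Hypothetical stand-in for the project's transformations.

    Trims and lowercases strings (or lists of strings). Replace this with
    the same logic your mastering project's transformations apply.
    """
    if isinstance(value, str):
        return value.strip().lower()
    if isinstance(value, list):
        return [normalize(v) for v in value]
    return value


def is_missing(value: Any) -> bool:
    """Treat None, blank strings, and lists with no non-blank values as null."""
    if value is None:
        return True
    if isinstance(value, str):
        return value.strip() == ""
    if isinstance(value, list):
        return all(is_missing(v) for v in value)
    return False


def prepare_records(
    records: Iterable[Record], blocking_attributes: List[str]
) -> Tuple[List[Record], List[Record]]:
    """Split records into (ready, rejected).

    ready: normalized records with every blocking attribute populated.
    rejected: original records missing at least one blocking attribute.
    """
    ready: List[Record] = []
    rejected: List[Record] = []
    for record in records:
        cleaned = {key: normalize(val) for key, val in record.items()}
        if any(is_missing(cleaned.get(attr)) for attr in blocking_attributes):
            rejected.append(record)  # keep the original for inspection
        else:
            ready.append(cleaned)
    return ready, rejected


if __name__ == "__main__":
    ready, rejected = prepare_records(
        [{"name": " Acme Corp ", "city": "Boston"}, {"name": None, "city": "NYC"}],
        blocking_attributes=["name", "city"],  # hypothetical attribute names
    )
    print(ready)     # [{'name': 'acme corp', 'city': 'boston'}]
    print(rejected)  # [{'name': None, 'city': 'NYC'}]
```

Records that fail this check cannot pass the blocking model, so filtering them out first avoids sending requests that cannot produce a match.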

Typically, after you make changes that affect clusters, you publish the clusters and then use the matching service. If there are no changes (that is, clusters have not been published since the last time you used the matching service), -1 is returned.

Using Low-Latency Match

To use LLM, first prepare the project, and then match records.

Preparing Low-Latency Match

Prepare a project to use LLM.

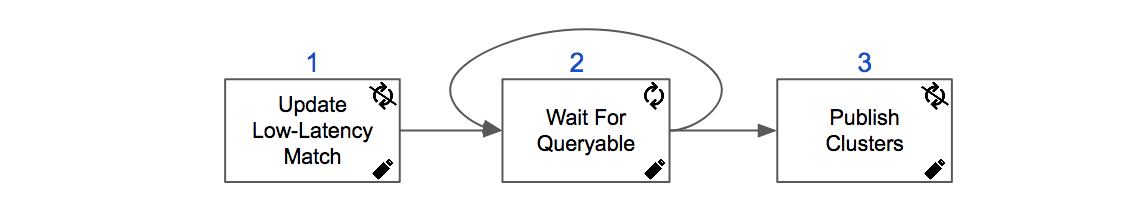

To prepare a mastering project for low-latency matching:

1. Update LLM: POST /v1/projects/{project}:updateLLM (port 9100 by default)

2. Wait For Queryable: GET /v1/projects/{project}:isQueryable (port 9170 by default)

Poll the status of the update job submitted to prepare the project until the response true is received.
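The two preparation steps can be scripted. The following is a minimal Python sketch using only the standard library. It assumes that Update LLM accepts an empty POST body, that the caller supplies any authentication headers, and that isQueryable returns the literal text true once the update finishes. The function names, the 5-second polling interval, and the attempt limit are hypothetical choices, not part of the Tamr Core API.

```python
import time
import urllib.request
from typing import Callable, Dict, Optional

UNIFY_PORT = 9100  # default TAMR_UNIFY_BIND_PORT
MATCH_PORT = 9170  # default TAMR_MATCH_BIND_PORT


def prepare_urls(host: str, project: str) -> Dict[str, str]:
    """Build the URLs for step 1 (Update LLM) and step 2 (Wait For Queryable)."""
    return {
        "update": f"http://{host}:{UNIFY_PORT}/v1/projects/{project}:updateLLM",
        "queryable": f"http://{host}:{MATCH_PORT}/v1/projects/{project}:isQueryable",
    }


def http_call(url: str, method: str, headers: Dict[str, str]) -> str:
    """Send a request (empty body for POST, an assumption) and return the text."""
    data = b"" if method == "POST" else None
    req = urllib.request.Request(url, data=data, headers=headers, method=method)
    with urllib.request.urlopen(req) as resp:
        return resp.read().decode("utf-8")


def wait_until_queryable(
    check: Callable[[], str],
    interval_s: float = 5.0,
    max_attempts: int = 120,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Poll `check` until it returns "true"; return the number of polls made."""
    for attempt in range(1, max_attempts + 1):
        if check().strip().lower() == "true":
            return attempt
        if attempt < max_attempts:
            sleep(interval_s)
    raise TimeoutError(f"project not queryable after {max_attempts} polls")


def prepare_project(
    host: str, project: str, headers: Optional[Dict[str, str]] = None
) -> int:
    """Run step 1, then poll step 2 until the project is queryable."""
    urls = prepare_urls(host, project)
    hdrs = headers or {}
    http_call(urls["update"], "POST", hdrs)
    return wait_until_queryable(lambda: http_call(urls["queryable"], "GET", hdrs))
```

For example, `prepare_project("tamr.example.com", "1")` (hypothetical host and project) would call Update LLM on port 9100 and then poll port 9170. Adjust `UNIFY_PORT` and `MATCH_PORT` if your deployment changes TAMR_UNIFY_BIND_PORT or TAMR_MATCH_BIND_PORT. Passing `sleep` as a parameter keeps the polling loop testable without real delays.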

To publish clusters:

3. Publish Clusters: POST /v1/projects/{project}/allPublishedClusterIds:refresh (port 9170 by default)

4. Wait For Queryable: GET /v1/projects/{project}:isQueryable (port 9170 by default)

Poll the status of the publish job until the response true is received.
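Steps 3 and 4 follow the same call-then-poll pattern. This minimal Python sketch assumes an empty POST body for the refresh call, caller-supplied authentication headers, and a literal true response from isQueryable; `publish_urls`, `publish_clusters`, and the polling defaults are hypothetical. The HTTP function is injectable so the flow can be exercised without a running Tamr Core instance.

```python
import time
import urllib.request
from typing import Callable, Dict, Optional, Tuple

MATCH_PORT = 9170  # default TAMR_MATCH_BIND_PORT


def publish_urls(host: str, project: str) -> Tuple[str, str]:
    """Return (refresh_url, queryable_url) for steps 3 and 4."""
    base = f"http://{host}:{MATCH_PORT}/v1/projects/{project}"
    return f"{base}/allPublishedClusterIds:refresh", f"{base}:isQueryable"


def http_call(url: str, method: str, headers: Dict[str, str]) -> str:
    """Send a request (empty body for POST, an assumption) and return the text."""
    data = b"" if method == "POST" else None
    req = urllib.request.Request(url, data=data, headers=headers, method=method)
    with urllib.request.urlopen(req) as resp:
        return resp.read().decode("utf-8")


def publish_clusters(
    host: str,
    project: str,
    headers: Optional[Dict[str, str]] = None,
    interval_s: float = 5.0,
    max_attempts: int = 120,
    call: Callable[[str, str, Dict[str, str]], str] = http_call,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Refresh published cluster IDs, then poll until the project is queryable."""
    refresh_url, queryable_url = publish_urls(host, project)
    hdrs = headers or {}
    call(refresh_url, "POST", hdrs)  # step 3: Publish Clusters
    for attempt in range(1, max_attempts + 1):  # step 4: Wait For Queryable
        if call(queryable_url, "GET", hdrs).strip().lower() == "true":
            return attempt
        sleep(interval_s)
    raise TimeoutError(f"project not queryable after {max_attempts} polls")
```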

Low-Latency Matching

To get match/no-match responses for incoming external records:

Low-Latency Match: POST /v1/projects/{project}:matchRecords (port 9170 by default)

This endpoint pairs each incoming, streamed query record that passes the project's blocking model with records in an existing mastering project (the {project}), and returns the probability of each match in a response stream.
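A request to this endpoint can be sketched in Python with the standard library. This sketch assumes that query records are sent as newline-delimited JSON (one object per line) with a JSON content type, and that each result comes back on its own line. The exact request and response schemas are not described in this topic, so confirm them in the Swagger documentation at http://<tamr_ip>:9170/docs. `match_records`, `encode_ndjson`, and `parse_ndjson` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Any, Dict, Iterable, List, Optional

MATCH_PORT = 9170  # default TAMR_MATCH_BIND_PORT


def encode_ndjson(records: Iterable[Dict[str, Any]]) -> bytes:
    """Serialize records as newline-delimited JSON (assumed request format)."""
    return "".join(json.dumps(r) + "\n" for r in records).encode("utf-8")


def parse_ndjson(body: str) -> List[Dict[str, Any]]:
    """Parse a newline-delimited JSON response, skipping blank lines."""
    return [json.loads(line) for line in body.splitlines() if line.strip()]


def match_records(
    host: str,
    project: str,
    records: Iterable[Dict[str, Any]],
    headers: Optional[Dict[str, str]] = None,
) -> List[Dict[str, Any]]:
    """POST query records to :matchRecords and return all parsed results."""
    url = f"http://{host}:{MATCH_PORT}/v1/projects/{project}:matchRecords"
    # Content type is an assumption; caller-supplied headers (e.g. auth) win.
    hdrs = {"Content-Type": "application/json", **(headers or {})}
    req = urllib.request.Request(
        url, data=encode_ndjson(records), headers=hdrs, method="POST"
    )
    with urllib.request.urlopen(req) as resp:
        return parse_ndjson(resp.read().decode("utf-8"))
```

This version reads the whole response before returning, which is simplest when the batch is small. Each record passed to `match_records` must already follow the request schema shown in the Swagger documentation and satisfy the pre-processing requirements listed under Before You Begin.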

To get cluster match probabilities for incoming external records:

Low-Latency Match: POST /v1/projects/{project}:matchClusters (port 9170 by default)

This endpoint compares incoming, streaming query records that pass the blocking model of an existing mastering project (the {project}) to the existing clusters, and returns the cluster with the highest probability as a match.
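Because results come back as a stream, a client can handle each cluster match as soon as it arrives instead of buffering the whole response. The minimal Python sketch below assumes newline-delimited JSON for both the request and the response (an assumption to verify in the Swagger documentation at http://<tamr_ip>:9170/docs); `stream_cluster_matches` and `iter_ndjson` are hypothetical names, and the fields inside each result are not interpreted here.

```python
import json
import urllib.request
from typing import Any, Dict, Iterable, Iterator, Optional

MATCH_PORT = 9170  # default TAMR_MATCH_BIND_PORT


def iter_ndjson(lines: Iterable[bytes]) -> Iterator[Dict[str, Any]]:
    """Yield one parsed JSON object per non-blank line as it arrives."""
    for raw in lines:
        line = raw.decode("utf-8").strip()
        if line:
            yield json.loads(line)


def stream_cluster_matches(
    host: str,
    project: str,
    records: Iterable[Dict[str, Any]],
    headers: Optional[Dict[str, str]] = None,
) -> Iterator[Dict[str, Any]]:
    """POST query records to :matchClusters and yield results incrementally."""
    url = f"http://{host}:{MATCH_PORT}/v1/projects/{project}:matchClusters"
    body = "".join(json.dumps(r) + "\n" for r in records).encode("utf-8")
    # Content type is an assumption; caller-supplied headers (e.g. auth) win.
    hdrs = {"Content-Type": "application/json", **(headers or {})}
    req = urllib.request.Request(url, data=body, headers=hdrs, method="POST")
    with urllib.request.urlopen(req) as resp:
        # The response object is iterable line by line, so each result can
        # be handled before the full response has been received.
        yield from iter_ndjson(resp)
```

A caller would typically loop over `stream_cluster_matches(...)` and act on each result in turn; the connection is closed once the generator is exhausted or closed.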
