Curating Project Jobs and Viewing Metrics

Curate pairs with assignments and responses.

Assigning and Curating Record Pairs

Both curators and verifiers assign and verify pairs in the mastering project workflow. See the following pages first:

Curators (and admins) decide when to run the system jobs that update grouping keys and the machine learning model.

Applying Feedback and Updating Mastering Results

The Apply Feedback and Update Results job:

- Trains the model using the latest verified pair feedback.

- Applies the model to all candidate pairs (replaces system pair suggestions with new ones).

- Clusters using the latest record cluster feedback.

- Assigns persistent IDs to clusters on initial run only (publishes).

- Generates new high-impact pairs.

Tip: It is a good practice to label at least 50 pairs, including both matching and non-matching pairs if possible, before first initiating this job. See Viewing and Verifying Pairs.
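As a rough illustration of this tip, the sketch below checks a set of verified pair labels against the suggested threshold before the first run. The function name `ready_for_first_run`, the label strings `"MATCH"` and `"NO_MATCH"`, and the input list are all hypothetical and not part of Tamr Core. The sketch assumes you have tallied or exported your labels by some other means.

```python
from collections import Counter

MIN_LABELED_PAIRS = 50  # threshold suggested by the tip above


def ready_for_first_run(labels, minimum=MIN_LABELED_PAIRS):
    """Return (enough_labels, has_both_classes) for a list of labels.

    `labels` is a hypothetical list of verified pair labels, each either
    "MATCH" or "NO_MATCH". These strings are illustrative assumptions.
    """
    counts = Counter(labels)
    enough_labels = len(labels) >= minimum
    has_both_classes = counts["MATCH"] > 0 and counts["NO_MATCH"] > 0
    return enough_labels, has_both_classes


# Example: 30 matching and 25 non-matching labels.
example_labels = ["MATCH"] * 30 + ["NO_MATCH"] * 25
print(ready_for_first_run(example_labels))
```

The two flags are returned separately because the tip treats the 50-pair count as the main guideline and the mix of matching and non-matching labels as desirable "if possible".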

To apply feedback and update mastering results:

- In a mastering project, select the Pairs page.

- Select Apply feedback and update results. See Monitoring Job Status.

Updating Mastering Results

The Update Results job:

- Applies the model to all candidate pairs (replaces system pair suggestions with new ones).

- Clusters using the latest record cluster feedback.

- Assigns persistent IDs to clusters on initial run only (publishes).

Tip: You can run the Update Results job only after at least one Apply Feedback and Update Results job has completed.

To update mastering results:

- In a mastering project, select the Pairs page.

- Select the dropdown arrow next to Apply feedback and update results, and then select Update results only. See Monitoring Job Status.

Regenerating Pairs

In projects where you enable learned pairs, the model learns more about how to label pairs from your cluster feedback. For Tamr Core to begin learning pairs from your cluster feedback, you must first regenerate pairs. See Learned pairs.

In projects where you enable the learned pairs feature, Tamr recommends that curators audit learned pairs on the Pairs page by filtering to Inferred and Learned Pairs.

If you change the grouping keys for your project after label verification has begun, Tamr Core uses record grouping history to infer labels for newly formed pairs. The Inferred and Learned Pairs filter also includes pairs that have an automatically applied inferred label.

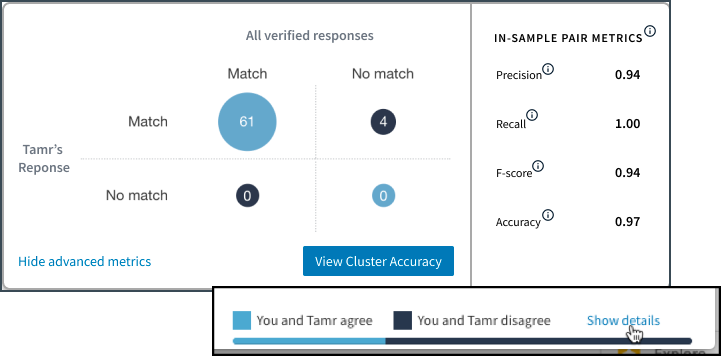

Viewing In-Sample Pair Metrics

Each time you apply feedback and update results, the model recomputes the following performance metrics by comparing the model predictions with verified feedback from experts:

- Accuracy: The ratio of correct Tamr Core predictions to all pairs with expert feedback; the overall correctness of suggestions.

- Precision: The ratio of correct matches to all matches predicted by the model. This is a measure of the effectiveness of finding true matching pairs, measured by (True Positives)/(True Positives + False Positives).

- Recall: The ratio of matching pairs that Tamr Core predicted correctly to all matching pairs identified by expert feedback. This is a measure of how well Tamr Core avoids missing matching pairs, measured by (True Positives)/(True Positives + False Negatives).

- F score: The harmonic mean of the precision and recall, where 1 is perfect precision and recall.
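The definitions above can be sketched as a short calculation from the four confusion matrix counts. This is a minimal illustration of the standard formulas, not Tamr Core's implementation. The function name `pair_metrics` and the example counts are hypothetical.

```python
def pair_metrics(tp, fp, fn, tn):
    """Compute in-sample pair metrics from confusion matrix counts.

    tp: pairs predicted as matches that experts verified as matches
    fp: pairs predicted as matches that experts verified as non-matches
    fn: pairs predicted as non-matches that experts verified as matches
    tn: pairs predicted as non-matches that experts verified as non-matches
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F score: harmonic mean of precision and recall.
    if precision + recall:
        f_score = 2 * precision * recall / (precision + recall)
    else:
        f_score = 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f_score": f_score,
    }


# Hypothetical counts for 100 pairs with expert feedback.
metrics = pair_metrics(tp=40, fp=10, fn=5, tn=45)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these example counts, accuracy is 85/100 and precision is 40/50. Empty denominators are reported as 0.0 here as a simplifying choice.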

Note: Checking these metrics for an upward trend as you iterate can help you evaluate the effect of the changes you make to your data, blocking model, or pairs. These metrics compare system-generated pair suggestions to the verified responses from experts. They do not indicate how accurate the model is for all pairs. For metrics computed on test records, see the precision and recall metrics for clusters.

For more information about these metrics, see Precision and Recall.

To view a confusion matrix and in-sample pair metrics:

1. In a mastering project, select the Pairs page.

2. In the bottom right corner, select Show details. The confusion matrix opens with the current values.

Note: This confusion matrix can help you determine whether Tamr Core's incorrect predictions are biased toward matching or non-matching labels.

A confusion matrix with metrics based on labeled pairs.

Tip: To filter pairs, you can click on each of the quadrants in this visualization.

3. To show the computed values for the in-sample pair metrics, select View advanced metrics. A panel with these values appears to the right of the matrix (shown above).

4. If cluster metrics are available for your mastering project, the View Cluster Accuracy option opens the precision and recall metrics for clusters.
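To make the idea of a bias toward matching or non-matching labels concrete, the sketch below tallies the four quadrants of a confusion matrix from a list of (predicted, verified) outcomes and compares the two kinds of error. The function names, the boolean encoding (True for a match), and the sample data are hypothetical and do not reflect how Tamr Core stores or exposes pairs.

```python
from collections import Counter


def confusion_counts(outcomes):
    """Tally confusion matrix quadrants.

    `outcomes` is an iterable of (predicted, verified) booleans,
    where True means "match". This encoding is an assumption.
    """
    counts = Counter({"tp": 0, "fp": 0, "fn": 0, "tn": 0})
    for predicted, verified in outcomes:
        if predicted and verified:
            counts["tp"] += 1
        elif predicted and not verified:
            counts["fp"] += 1
        elif not predicted and verified:
            counts["fn"] += 1
        else:
            counts["tn"] += 1
    return counts


def error_bias(counts):
    """Describe which kind of mistake dominates."""
    if counts["fp"] > counts["fn"]:
        return "biased toward matching labels"      # more false positives
    if counts["fn"] > counts["fp"]:
        return "biased toward non-matching labels"  # more false negatives
    return "no clear bias"


# Hypothetical sample: 3 true positives, 2 false positives,
# 1 false negative, 4 true negatives.
sample = (
    [(True, True)] * 3
    + [(True, False)] * 2
    + [(False, True)] * 1
    + [(False, False)] * 4
)
counts = confusion_counts(sample)
print(dict(counts), error_bias(counts))
```

A simple count comparison is used here for clarity. With few labeled pairs, a small difference between the two error counts may not be meaningful.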
