Categorization Projects

A categorization project matches each record in a unified dataset to its single best-fitting category in a systematically organized taxonomy.

Purpose and Overview

Categorization projects help you get value from your data by organizing related records into distinct categories. For example, in spend management, categorizing records can show you how much you spend on specific parts or types of parts, either across suppliers or with a single supplier.

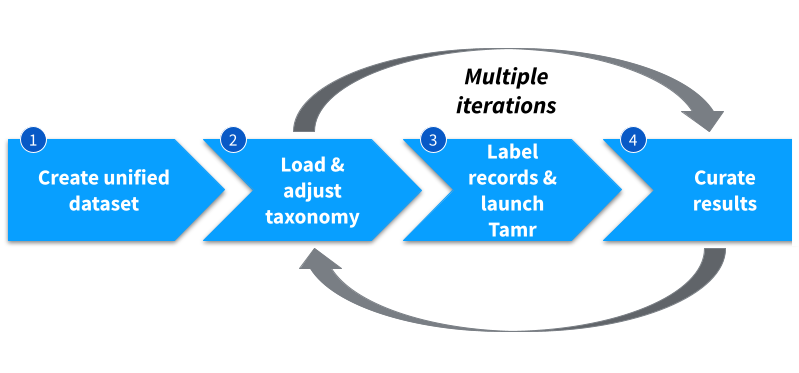

Categorization project workflow.

Categorization Workflow

The categorization workflow consists of the following steps:

- Create a unified dataset.

- Load a taxonomy and optionally adjust it based on reviewer feedback. (You can complete steps 1 and 2 in either order.)

- Label an initial set of records, then launch the machine learning model to categorize records.

- Review the categories suggested by the model, provide feedback, and re-launch the model to improve record categorization.

Step 1: Create a Unified Dataset for the Categorization Project

The initial stage of a categorization project is similar to that of a schema mapping project: an admin or author creates the project and uploads one or more input datasets. As in a schema mapping project, curators then map attributes in the input datasets to attributes in the unified schema. See Creating the Unified Dataset for Categorization.

Curators then complete additional configuration in the unified schema that is specific to categorization projects:

- Specify the tokenizers that the machine learning model uses to compare text values.

- Optionally, identify a numeric unified attribute as the "spend" attribute. Tamr Core uses this attribute to compute a total amount per category, which you can use to sort project data.

- Optionally, set up transformations for the data in the unified dataset.
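To illustrate what a per-category spend total means, the following minimal Python sketch sums a numeric spend value for each category and sorts categories by that total. It is not Tamr Core's implementation: the record layout (dicts with hypothetical `category` and `spend` keys) and the function name `total_spend_by_category` are assumptions for illustration only.

```python
from collections import defaultdict


def total_spend_by_category(records):
    """Sum the numeric spend value per category (hypothetical record layout)."""
    totals = defaultdict(float)
    for record in records:
        category = record.get("category")
        spend = record.get("spend")
        # Skip records that are not yet categorized or have no spend value.
        if category is None or spend is None:
            continue
        totals[category] += float(spend)
    # Sort categories from highest to lowest total spend.
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


records = [
    {"category": "Bolt", "spend": 120.0},
    {"category": "Nut", "spend": 40.0},
    {"category": "Bolt", "spend": 80.0},
    {"category": None, "spend": 15.0},
]
print(total_spend_by_category(records))  # [('Bolt', 200.0), ('Nut', 40.0)]
```

Sorting by the total mirrors the idea of using spend to prioritize which categories to look at first.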

Step 2: Load and Adjust the Taxonomy

A curator loads an existing taxonomy file into the project, either before or after the unified dataset is created. Curators can then make additions and other changes to adapt the taxonomy to your organization's needs.

As curators iteratively make changes to the taxonomy to incorporate feedback, the machine learning model suggests categories for records with increasing confidence. See Navigating a Taxonomy.
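A taxonomy is a hierarchy of categories, or nodes. One minimal way to model it, assuming each node is identified by its path from the root, is sketched below. The `Taxonomy` class and its methods are hypothetical and do not reflect Tamr Core's taxonomy file format or API; adding a node here also adds any missing parent nodes.

```python
class Taxonomy:
    """Hypothetical in-memory taxonomy: each node is a tuple path from the root."""

    def __init__(self):
        self.nodes = set()

    def add_node(self, path):
        """Add a node and any missing ancestors, e.g. ("Hardware", "Fasteners", "Bolt")."""
        path = tuple(path)
        for depth in range(1, len(path) + 1):
            self.nodes.add(path[:depth])

    def children(self, path):
        """Return the direct children of a node, sorted by path."""
        path = tuple(path)
        return sorted(
            node
            for node in self.nodes
            if len(node) == len(path) + 1 and node[: len(path)] == path
        )


taxonomy = Taxonomy()
taxonomy.add_node(("Hardware", "Fasteners", "Bolt"))
taxonomy.add_node(("Hardware", "Fasteners", "Nut"))
print(taxonomy.children(("Hardware", "Fasteners")))
# [('Hardware', 'Fasteners', 'Bolt'), ('Hardware', 'Fasteners', 'Nut')]
print(len(taxonomy.nodes))  # 4
```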

Step 3: Train and Launch the Model to Put Records into Categories

In this step, verifiers and curators label a small set of records with the appropriate "node" in the taxonomy. This initial training requires finding and labeling at least one record for every node in the taxonomy. See Training Tamr Core to Categorize Records.
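Because initial training calls for at least one labeled record per taxonomy node, it can help to check coverage before launching the model. The sketch below is an illustration, not a Tamr Core feature; it assumes labels are kept as a plain mapping from hypothetical record IDs to node names.

```python
def unlabeled_nodes(taxonomy_nodes, labels):
    """Return taxonomy nodes that no labeled record has been assigned to yet.

    taxonomy_nodes: iterable of node names (hypothetical representation).
    labels: mapping of record ID -> node name assigned during training.
    """
    labeled = set(labels.values())
    return sorted(node for node in taxonomy_nodes if node not in labeled)


nodes = ["Bolt", "Nut", "Washer"]
labels = {"rec-1": "Bolt", "rec-2": "Bolt", "rec-3": "Nut"}
print(unlabeled_nodes(nodes, labels))  # ['Washer']
```

An empty result would mean every node has at least one training example.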

When curators apply feedback and update results, the machine learning model uses this representative sample to suggest categories: it compares the values now associated with each taxonomy category against the values in other records and identifies similarities between them. See Updating Categorization Results.
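Tamr Core's actual model is not described here. As a rough intuition for comparing values, the sketch below swaps in a much simpler technique: it tokenizes text, scores each labeled example by Jaccard token overlap, and suggests the category of the closest match. The tokenizer, function names, and data are assumptions for illustration only.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens (a simple stand-in tokenizer)."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a, b):
    """Jaccard similarity between two token sets (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def suggest_category(description, labeled_examples):
    """Suggest the category of the most similar labeled example, with its score.

    labeled_examples: list of (description, category) pairs from training.
    """
    tokens = tokenize(description)
    best_category, best_score = None, 0.0
    for example_text, category in labeled_examples:
        score = jaccard(tokens, tokenize(example_text))
        if score > best_score:
            best_category, best_score = category, score
    return best_category, best_score


training = [
    ("hex bolt steel 1 inch", "Bolt"),
    ("hex nut steel", "Nut"),
]
print(suggest_category("1 inch turbine bolt", training))  # ('Bolt', 0.5)
```

More labeled examples per category give the comparison more values to match against, which is one intuition for why additional training improves suggestions.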

Step 4: Include Reviewer Feedback and Verify the Results

After curators and verifiers complete initial training and the machine learning model generates suggested categories, curators and verifiers assign records to one or more experts for feedback. These experts typically have the reviewer role, but can have another role. See Assigning Records in Categorization Projects.

Reviewers upvote or downvote the categories proposed by the model or by other team members, and can propose different categories to label records more accurately. See Reviewing Categorizations.

To make review more efficient, Tamr Core flags certain suggestions as high-impact. For example, if the model has low confidence about whether a record for "1-inch turbine bolts" belongs to the "Bolt" category, Tamr Core marks that record as high-impact so that a reviewer can provide feedback. Team members with any user role can upvote or downvote labels, so you can assign review work to whichever roles make the most sense for your organization. See Categorizing Records.
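Tamr Core's exact rule for marking suggestions as high-impact is not given here. As a simplified illustration of the low-confidence case described above, the sketch below flags records whose suggested category has a confidence below a cutoff and orders them least-confident first. The data layout, the function name `high_impact`, and the 0.6 threshold are all hypothetical.

```python
def high_impact(suggestions, threshold=0.6):
    """Return IDs of records whose suggestion confidence is below the threshold,
    least confident first.

    suggestions: mapping of record ID -> (suggested category, confidence in [0, 1]).
    threshold: hypothetical cutoff; Tamr Core's real criteria may differ.
    """
    low = [
        (confidence, record_id)
        for record_id, (_, confidence) in suggestions.items()
        if confidence < threshold
    ]
    return [record_id for _, record_id in sorted(low)]


suggestions = {
    "rec-1": ("Bolt", 0.95),
    "rec-2": ("Bolt", 0.52),  # e.g. a record for "1-inch turbine bolts"
    "rec-3": ("Nut", 0.30),
}
print(high_impact(suggestions))  # ['rec-3', 'rec-2']
```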

Reviewer feedback does not change the machine learning model until a verifier or curator verifies the categorizations for one or more records and a curator then updates the model with the results. Tamr Core adds the verified expert feedback to its model, which improves the accuracy of future automated categorization across large numbers of records. See Verifying Record Categorizations (verifiers) and Updating Categorization Results (curators).
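The rule that only verified feedback reaches the model can be pictured as a filter over labels. The minimal sketch below assumes a hypothetical `Label` record with a `verified` flag; it illustrates the workflow rule and is not Tamr Core's data model.

```python
from dataclasses import dataclass


@dataclass
class Label:
    record_id: str
    category: str
    verified: bool  # hypothetical flag: set once a verifier or curator verifies the label


def training_labels(labels):
    """Keep only verified labels; unverified reviewer feedback is not used for training."""
    return [label for label in labels if label.verified]


labels = [
    Label("rec-1", "Bolt", verified=True),
    Label("rec-2", "Nut", verified=False),
    Label("rec-3", "Washer", verified=True),
]
print([label.record_id for label in training_labels(labels)])  # ['rec-1', 'rec-3']
```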

For more information, see User Roles and Tamr Core Documentation.
