Reviewing Pairs

Review pairs in a mastering project by filtering to pairs of interest and labeling pairs that do and do not match.

Applying Your Expertise in a Mastering Project

Datasets can include multiple records that refer to the same entity. Mastering projects "deduplicate" a dataset by identifying records that do, and do not, represent the same entity.

Interpreting Tamr Suggestions

As a reviewer, you are assigned a sampling of paired records, or paired record groups, in a dataset. When you begin your review, Tamr Core has already paired up records and predicted whether each pair is a Match or No match. You review these predictions and use your expertise to determine whether they are correct, and provide a match or no match label to reflect your input.

You can also comment on pairs to provide context or to explain your response.

Curators and verifiers decide between conflicting responses. By providing labels and adding comments, reviewers give the curators insight into their responses, and ultimately help them make the most informed decisions.

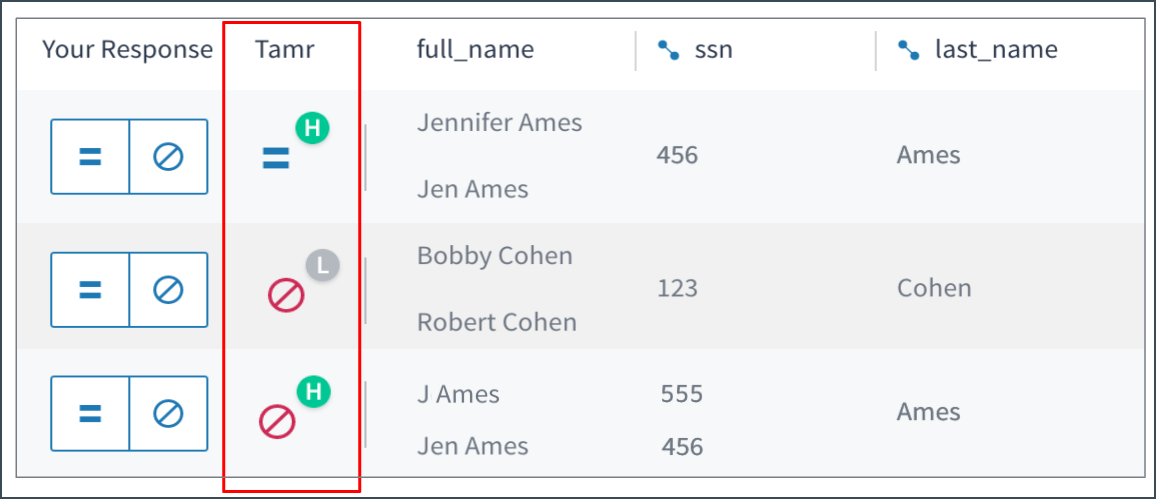

In a mastering project, the Tamr column on the Pairs page shows the Match or No match suggestion along with an indication of the level of confidence for the suggestion: High, Medium, or Low. Confidence is a loose measure of how many of Tamr Core's internal classifiers agree on a label. Low-confidence labels often need to be examined by you or a colleague, whereas high-confidence pairs may be streamlined directly through a data pipeline.

Tamr suggestions for pairs of records, with levels of confidence.

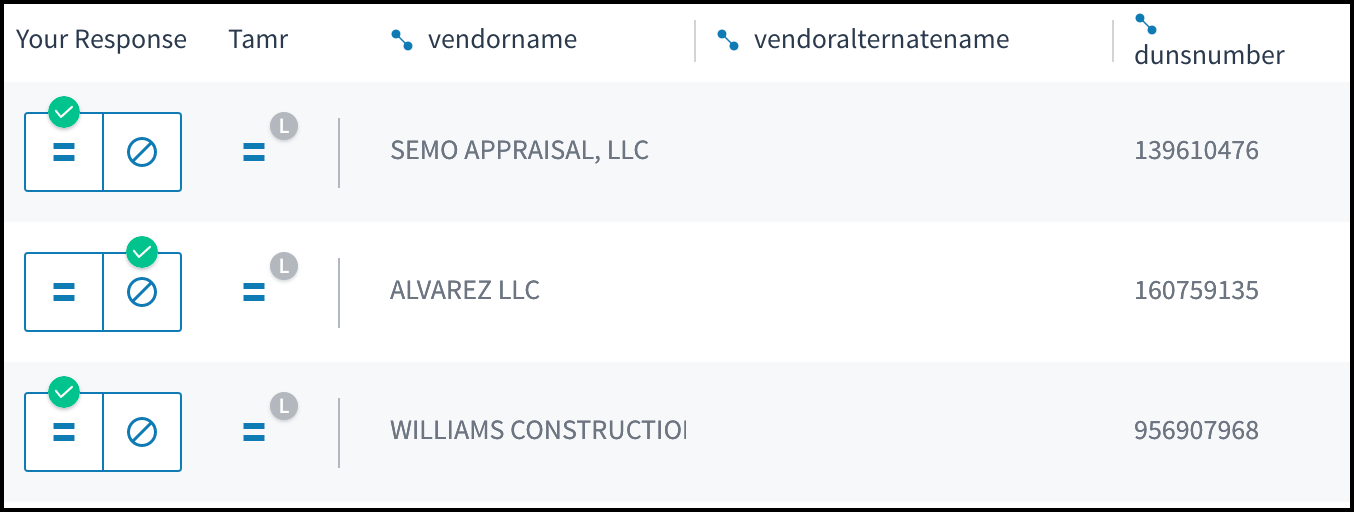
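To make the idea of confidence as classifier agreement more concrete, the following minimal sketch derives a suggestion and a confidence level from a list of votes. It is an illustration only: the `suggest` function, the vote format, and the 0.9 and 0.7 thresholds are assumptions made for this example and do not describe how Tamr Core computes confidence internally.

```python
from collections import Counter


def suggest(votes):
    """Return (label, confidence_level) from per-classifier votes.

    votes: list of "match" / "no_match" strings, one per hypothetical classifier.
    """
    counts = Counter(votes)
    label, top = counts.most_common(1)[0]
    agreement = top / len(votes)  # share of classifiers that agree with the majority

    # Illustrative thresholds only; not Tamr Core's actual cutoffs.
    if agreement >= 0.9:
        level = "high"
    elif agreement >= 0.7:
        level = "medium"
    else:
        level = "low"
    return label, level


unanimous = suggest(["match"] * 10)
mostly_no = suggest(["no_match"] * 8 + ["match"] * 2)
split = suggest(["match"] * 6 + ["no_match"] * 4)
```

In this sketch, a near-even split produces a low-confidence suggestion, which is the kind of pair that most benefits from a reviewer's label.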

Interpreting Checkmarks for Pairs

For some pairs, the Your Response column might already include a check mark indicator over the icons, as in the following example. These check marks alert you to labels that one (or more) of your colleagues have already applied to the pair. You can still label these pairs with your own match or no match evaluation.

The green check above the match (equals sign) or no match (no symbol) icons shows another team member's response.

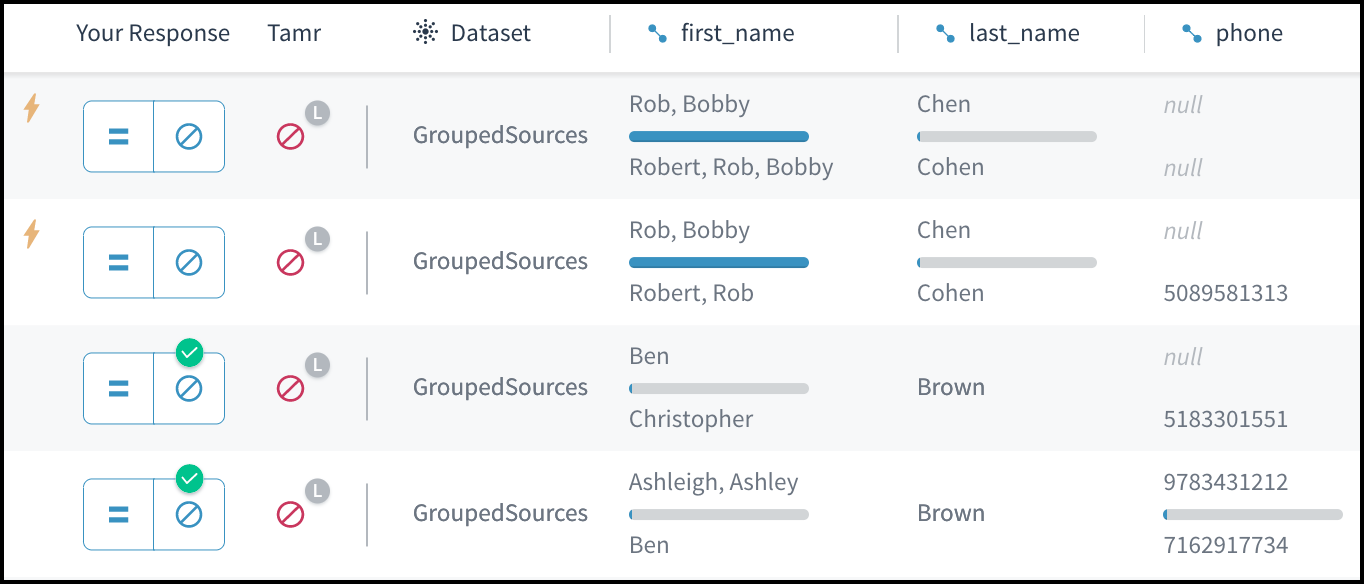

Interpreting Values for Record Groups

In mastering projects that include the record grouping stage, records that have identical values for specific key attributes, such as a government-issued identification number, are grouped together as obvious duplicates. Groups that include more than one record can show multiple values for the other, non-key attributes. Records that have one or more unique values for the key attributes are placed in "singleton" groups. Specific input datasets can be excluded from this process, which leaves their records ungrouped.

In a project that groups records, you review the predictions that Tamr Core makes about a pair of groups or records. In the following example, several pairs of groups appear. In the first two pairs, multiple values from the records in the group appear for the first_name attribute.

Grouped records can show aggregated values for some attributes.

One of the options for record grouping is to group records with identical values across input datasets. When this option is selected, the Dataset is “GroupedSources”. Otherwise, the name of the source dataset for the group’s records appears.
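The grouping behavior described in this section can be sketched in a few lines. This is a simplified illustration, not Tamr Core's implementation: the `group_records` and `aggregate` helpers, the `national_id` key attribute, and the sample records are all hypothetical.

```python
from collections import defaultdict


def group_records(records, key_attrs):
    """Group records that share identical values for every key attribute."""
    groups = defaultdict(list)
    for rec in records:
        key = tuple(rec[a] for a in key_attrs)
        groups[key].append(rec)
    return dict(groups)


def aggregate(group, attr):
    """Distinct values of a non-key attribute across a group, in first-seen order."""
    seen = []
    for rec in group:
        if rec[attr] not in seen:
            seen.append(rec[attr])
    return seen


# Hypothetical sample data.
records = [
    {"national_id": "A1", "first_name": "Katherine"},
    {"national_id": "A1", "first_name": "Kate"},
    {"national_id": "B2", "first_name": "Luis"},
]

groups = group_records(records, ["national_id"])
a1_names = aggregate(groups[("A1",)], "first_name")
```

Here the two records that share `national_id` "A1" form one group that shows two values for `first_name`, while the record with "B2" ends up in a singleton group.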

Options for Working with Pairs

When you label pairs, you use your expertise to indicate whether the two records or groups do or do not represent the same entity. When reviewing pairs, you can do the following:

- View all of the data values in each record or group in a pair to get a more detailed, side-by-side view. See Viewing Pairs Side-By-Side.

- Add comments to pairs to explain your choice or to provide or request additional information. See Adding Comments to a Pair.

- Reduce the number of pairs to those that meet certain criteria. See Filtering Pairs.

For information on configuring the display of tabular data, or searching for data, see Navigating Data. For more information on how curators verify pairs, see Viewing and Verifying Pairs.

Pair Review Guidelines

Follow these pair review guidelines to most effectively train the machine learning model. Provide a representative initial sample of labeled records so that Tamr Core can do the heavy lifting for you.

Prioritize High-Impact Pairs

As a reviewer, your job is to go through your assigned pairs and tell Tamr Core when it is right and when it is wrong. Tamr Core helps you do this by telling you which pairs it is least sure about. These are called high-impact pairs, indicated by the lightning bolt to the left of the pair. Provide your feedback on these pairs first, as they have the biggest impact on helping Tamr Core learn about your data.

By default, Tamr Core sorts high-impact pairs to the top of the first page of results; if they don't appear, you can filter to them. Open the filter panel by choosing Filter, and then check the box to show only high-impact pairs.

Whenever a curator updates results, Tamr Core creates new high-impact pairs. Responding to these pairs gives Tamr Core information about parts of the data where it has low confidence.
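One common way to find the pairs a model is least sure about is uncertainty sampling: rank pairs by how close their score is to the decision boundary. The sketch below assumes each pair carries a hypothetical `match_score` between 0 and 1; this is an illustration of the general technique, not a description of how Tamr Core selects high-impact pairs.

```python
def rank_by_uncertainty(pairs):
    """Sort pairs so that scores closest to 0.5 (least certain) come first."""
    return sorted(pairs, key=lambda p: abs(p["match_score"] - 0.5))


# Hypothetical scores for four pairs.
pairs = [
    {"id": 1, "match_score": 0.97},
    {"id": 2, "match_score": 0.52},
    {"id": 3, "match_score": 0.10},
    {"id": 4, "match_score": 0.45},
]

ranked = rank_by_uncertainty(pairs)
ranked_ids = [p["id"] for p in ranked]
```

Pairs 2 and 4 rank first because their scores sit nearest to 0.5, so a label on either one tells the model the most about a region where it is unsure.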

Find and Label Edge Cases

As you respond, look for pairs where Tamr Core has high confidence about a prediction but is wrong. Correcting cases where Tamr Core is wrong helps it learn faster. The feedback you provide by labeling these pairs is the most valuable for improving the matching model, regardless of Tamr Core's confidence level.

The navigation tools on the Pairs page help you find the most impactful pairs to review. You can filter to cases where experts and Tamr Core disagree. Study these cases to understand what Tamr Core is getting wrong. The confusion matrix (found in the bottom right corner, under Show details) can help you determine if these mistakes are biased towards match or no match labels.
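If you want to reason about the same kind of bias outside the UI, for example on a set of pairs you have copied into a script, a confusion matrix is simple to compute. The sketch below is a minimal illustration; the `suggested` and `label` field names and the sample data are assumptions made for this example.

```python
from collections import Counter


def confusion(pairs):
    """Count (suggested, reviewer label) combinations, skipping unlabeled pairs."""
    return Counter((p["suggested"], p["label"]) for p in pairs if p.get("label"))


# Hypothetical pairs: the suggestion and the reviewer's label, if any.
pairs = [
    {"suggested": "match", "label": "match"},
    {"suggested": "match", "label": "no_match"},
    {"suggested": "match", "label": "no_match"},
    {"suggested": "no_match", "label": "no_match"},
    {"suggested": "no_match", "label": "match"},
    {"suggested": "no_match", "label": None},
]

cm = confusion(pairs)
false_matches = cm[("match", "no_match")]   # suggested Match, reviewer said No match
missed_matches = cm[("no_match", "match")]  # suggested No match, reviewer said Match
```

In this sample the mistakes lean toward suggesting Match too often (two false matches against one missed match), which would point you toward labeling more No match edge cases.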

Find and Correct Records that Do Not Match

Find and correct records that do not match, but seem like they should at first glance. Identify whether there is an attribute (or set of attributes) that, when different, is a very strong indicator that two records do not match.

Try the following:

- Filter to pairs that are identified as Matches but have different values for these attributes.

- Respond to a dozen or so No matches.

- If the predictions do not improve after a curator updates results, respond to more edge cases.
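The first step in this list amounts to a simple filter: keep the pairs suggested as matches whose records disagree on a strongly distinguishing attribute. The sketch below shows that logic on exported data; the pair structure and the `tax_id` attribute are hypothetical, and in the product you would apply the equivalent filters on the Pairs page.

```python
def suspicious_matches(pairs, attr):
    """Pairs suggested as matches whose two records differ on `attr`."""
    return [
        p for p in pairs
        if p["suggested"] == "match" and p["left"][attr] != p["right"][attr]
    ]


# Hypothetical pairs; tax_id stands in for a strongly distinguishing attribute.
pairs = [
    {"id": 1, "suggested": "match",
     "left": {"tax_id": "111"}, "right": {"tax_id": "111"}},
    {"id": 2, "suggested": "match",
     "left": {"tax_id": "111"}, "right": {"tax_id": "222"}},
    {"id": 3, "suggested": "no_match",
     "left": {"tax_id": "333"}, "right": {"tax_id": "444"}},
]

to_review = [p["id"] for p in suspicious_matches(pairs, "tax_id")]
```

Only pair 2 is returned: it is suggested as a match even though the two records carry different `tax_id` values, which makes it a good candidate for a No match label.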

Find and Correct Records that Do Match

Find and correct pairs that do match, but might not seem like it at first glance. Tamr Core might incorrectly identify a true match as a no match.

To correct these cases, and provide the machine learning model with valuable data, try the following:

- Filter to pairs that are identified as No matches but in which all salient attributes match when you allow for a lower similarity on the attributes of interest.

- Respond to a dozen or so Matches.

- If the predictions do not improve after a curator updates results, respond to more edge cases.

Once you figure out the kinds of mistakes Tamr Core is making, use similarity filters to find unlabeled examples where Tamr Core is wrong in the same way.
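As a rough illustration of what a similarity filter does, the sketch below finds unlabeled pairs that are suggested as No match even though their salient attributes are nearly identical. It uses Python's standard `difflib.SequenceMatcher` as a stand-in similarity measure; the 0.8 threshold, the field names, and the sample data are assumptions, and Tamr Core's own similarity functions may behave differently.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Case-insensitive string similarity in the range [0, 1]."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def likely_missed_matches(pairs, attrs, threshold=0.8):
    """Unlabeled pairs suggested as No match whose `attrs` are all highly similar."""
    found = []
    for p in pairs:
        if p["suggested"] != "no_match" or p.get("label"):
            continue  # skip suggested matches and pairs that already have a label
        if all(similarity(p["left"][a], p["right"][a]) >= threshold for a in attrs):
            found.append(p)
    return found


# Hypothetical pairs.
pairs = [
    {"id": 1, "suggested": "no_match", "label": None,
     "left": {"name": "Acme Corp", "city": "Boston"},
     "right": {"name": "ACME Corp.", "city": "Boston"}},
    {"id": 2, "suggested": "no_match", "label": None,
     "left": {"name": "Acme Corp", "city": "Boston"},
     "right": {"name": "Zenith Ltd", "city": "Denver"}},
    {"id": 3, "suggested": "no_match", "label": "match",
     "left": {"name": "Acme Corp", "city": "Boston"},
     "right": {"name": "Acme Corp", "city": "Boston"}},
]

candidates = [p["id"] for p in likely_missed_matches(pairs, ["name", "city"])]
```

Pair 1 is the only candidate: pair 2 is genuinely dissimilar, and pair 3 already has a label.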

Be Honest in Your Responses

Most importantly, be honest and thoughtful with your feedback so that Tamr Core can learn to accurately identify duplicate records. You do not need to label every pair assigned to you; if you aren't sure whether a pair is a match, don’t guess. Instead, add a comment with your concerns about the pair so that it can be assigned to another reviewer. See Adding Comments to a Pair. The quality of the model depends on the quality of the labels you provide. This means Tamr Core learns best when you do not guess about or mislabel data.

Adding Comments to Clustered Records

The final step in a mastering project is the review and verification of clusters of records. To help evaluate whether every record in a cluster does, in fact, represent the same unique entity, you might be asked to comment on records in a cluster. See Commenting on Cluster Records.
