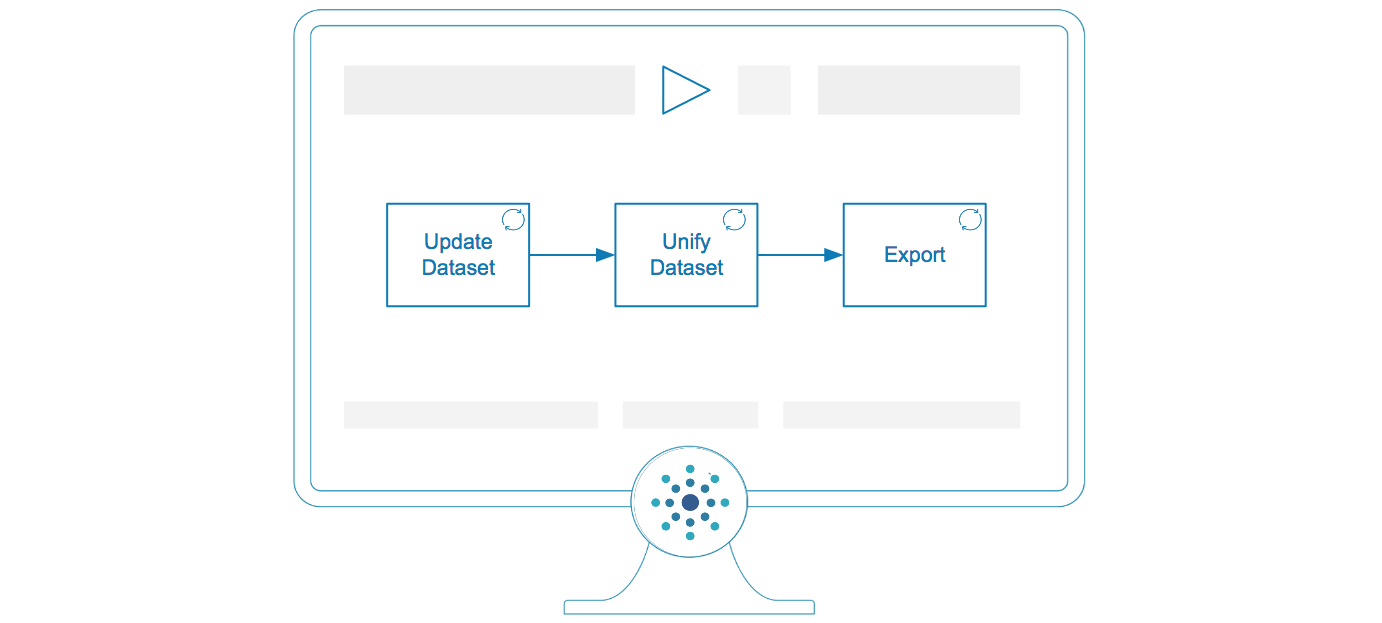

Categorization Pipeline

Run Tamr Core continuously from dataset update through categorization and export.

Automate the running of jobs and common user tasks in a Categorization project.

Categorization pipeline.

Using Tamr Toolbox to Automate the Categorization Pipeline

You can use the Tamr Toolbox to automate running jobs and common user tasks. Tamr Toolbox is a Python library that provides a simple interface for common interactions with Tamr Core and for common data workflows that include Tamr Core. See the Tamr Toolbox documentation.

Before You Begin

Verify the following before completing the procedures in this topic:

- At least one Categorization project exists. See Creating a Project.

- The project includes at least one dataset, and you have performed schema mapping on the project’s unified dataset. See Adding a Dataset to a Project.

- You have run both the Update Unified Dataset and Update Categorizations jobs.

Updating the Input Datasets

Complete this procedure for each input dataset in your project.

To update an input dataset:

1. Find the id of your input dataset: GET /v1/datasets

   When this API is used with a filter parameter such as name==my-dataset.csv, it returns the dataset definition of the named dataset. From the API response, capture the numeric value after datasets/ in the id of the desired dataset to use as the datasetId in subsequent steps.

2. (Optional) Delete (truncate) records in the input dataset: DELETE /v1/datasets/{datasetId}/records

   Complete this step if you want to remove all records currently in your input dataset and include only the source records added during the next step. Use the datasetId you obtained in step 1.

3. Update existing records and add new records to the input dataset: POST /v1/datasets/{datasetId}:updateRecords?header=false

   Update the records of the dataset {datasetId} using the command CREATE for new records and record updates, and the command DELETE to delete individual records. Use the datasetId you obtained in step 1.
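The three steps above can be sketched in Python using only the standard library. This is a minimal sketch under stated assumptions, not a reference client: the base URL (`http://localhost:9100/api/versioned`), the form of the id field (`unify://unified-data/v1/datasets/12`), the JSON shape of each CREATE/DELETE command (`action`, `recordId`, `record`), and the `auth_header` value are assumptions to check against the API reference for your Tamr Core version. All helper names are hypothetical.

```python
import json
import re
import urllib.parse
import urllib.request

# Hypothetical base URL for a Tamr Core instance; replace with your own.
BASE_URL = "http://localhost:9100/api/versioned"


def numeric_id(resource_id: str, collection: str) -> str:
    """Return the number after '<collection>/' in a resource id.

    Assumes ids look like 'unify://unified-data/v1/datasets/12'.
    """
    match = re.search(re.escape(collection) + r"/(\d+)", resource_id)
    if match is None:
        raise ValueError(f"no {collection}/<number> in {resource_id!r}")
    return match.group(1)


def find_by_name_url(collection: str, name: str) -> str:
    """Build the URL for step 1, e.g. GET /v1/datasets?filter=name==my-dataset.csv."""
    query = urllib.parse.urlencode({"filter": f"name=={name}"})
    return f"{BASE_URL}/v1/{collection}?{query}"


def update_records_body(upserts: dict, deletes: list) -> bytes:
    """Encode step 3's commands as newline-delimited JSON.

    upserts maps record id -> record dict (CREATE covers new and updated
    records); deletes lists record ids to DELETE. The command shape is assumed.
    """
    lines = [json.dumps({"action": "CREATE", "recordId": rid, "record": rec})
             for rid, rec in upserts.items()]
    lines += [json.dumps({"action": "DELETE", "recordId": rid}) for rid in deletes]
    return "\n".join(lines).encode("utf-8")


def update_records(dataset_id: str, body: bytes, auth_header: str) -> dict:
    """POST /v1/datasets/{datasetId}:updateRecords?header=false (JSON response assumed)."""
    url = f"{BASE_URL}/v1/datasets/{dataset_id}:updateRecords?header=false"
    request = urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": auth_header},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

For example, `update_records(numeric_id(dataset["id"], "datasets"), update_records_body({"42": {"name": "Acme"}}, ["17"]), auth_header)` would create or update record 42 and delete record 17 in a single request.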

Updating and Exporting Record Categorizations

Complete this procedure after updating your input datasets.

To update and export categorizations:

1. Find the id of your categorization project: GET /v1/projects

   When this API is used with a filter parameter such as name==my-project, it returns the project definition of the named project. From the API response, capture the numeric value after projects/ in the id of the desired project to use as the project id in subsequent steps, and also capture the project's unifiedDatasetName.

2. Refresh the unified dataset: POST /v1/projects/{project}/unifiedDataset:refresh

   Update the unified dataset of the project using its project id {project}. Use the project id obtained in step 1. Additionally, capture the id of the submitted operation from the response.

3. Wait for the operation: GET /v1/operations/{operationId}

   Using the operation id captured in the previous step, poll the operation's status.state until status.state=SUCCEEDED is received.

4. (Optional) Train the categorization model: POST /v1/projects/{project}/categorizations/model:refresh

   If users added manual categorizations and you want the categorization model to incorporate this information, run this step. If you prefer to use a previously trained model, skip this step. Use the project id obtained in step 1. Additionally, capture the id of the submitted operation from the response.

5. (Optional) Wait for the operation: GET /v1/operations/{operationId}

   If you completed the previous step, complete this step too. Using the operation id captured in the previous step, poll the operation's status.state until status.state=SUCCEEDED is received.

6. Categorize records: POST /v1/projects/{project}/categorizations:refresh

   Apply the categorization model for the project {project}. Use the project id obtained in step 1. Additionally, capture the id of the submitted operation from the response.

7. Wait for the operation: GET /v1/operations/{operationId}

   Using the operation id captured in the previous step, poll the operation's status.state until status.state=SUCCEEDED is received.

8. Find the id of the output dataset: GET /v1/datasets

   When this API is used with a filter parameter such as name==my-dataset.csv, it returns the dataset definition of the named dataset. For the export dataset, find the dataset whose name matches <unified_dataset_name>_classifications_with_data, where <unified_dataset_name> is the unifiedDatasetName of your project captured in step 1. From the API response, capture the numeric value after datasets/ in the id of the desired dataset to use as the output dataset id in subsequent steps.

9. Refresh the output dataset: POST /v1/datasets/{datasetId}:refresh

   To refresh the final output dataset, use the output dataset id you captured in the previous step. Additionally, capture the id of the submitted operation from the response.

10. Wait for the operation: GET /v1/operations/{operationId}

    Using the operation id captured in the previous step, poll the operation's status.state until status.state=SUCCEEDED is received.

11. Stream the records of the output dataset: GET /v1/datasets/{datasetId}/records

    Obtain the records of the output dataset as JSON or Avro, using the output dataset id captured in step 8.
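The submit-then-poll sequence in this procedure can be sketched with the Python standard library as well. This is an illustration under assumptions, not Tamr's own tooling: it assumes the base URL shown, that each refresh call returns an operation object with an id field, that FAILED and CANCELED are terminal failure states alongside SUCCEEDED, and that the records endpoint returns newline-delimited JSON when asked for application/json. The `auth_header` value and all function names are hypothetical.

```python
import json
import time
import urllib.request
from typing import Callable, Iterator, List

# Hypothetical base URL for a Tamr Core instance; replace with your own.
BASE_URL = "http://localhost:9100/api/versioned"


def call(method: str, path: str, auth_header: str) -> dict:
    """Send a request with an empty body and return the parsed JSON response."""
    request = urllib.request.Request(
        BASE_URL + path,
        data=b"" if method == "POST" else None,  # empty body for POST jobs
        method=method,
        headers={"Authorization": auth_header},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def wait_for_operation(
    operation_id: str,
    get_state: Callable[[str], str],
    poll_seconds: float = 5.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Poll until status.state is SUCCEEDED; raise on failure or timeout."""
    waited = 0.0
    while True:
        state = get_state(operation_id)
        if state == "SUCCEEDED":
            return
        if state in ("FAILED", "CANCELED"):  # assumed terminal failure states
            raise RuntimeError(f"operation {operation_id} ended in state {state}")
        if waited >= timeout_seconds:
            raise TimeoutError(f"operation {operation_id} still {state} after {waited}s")
        sleep(poll_seconds)
        waited += poll_seconds


def operation_state(operation_id: str, auth_header: str) -> str:
    """GET /v1/operations/{operationId} and return its status.state."""
    return call("GET", f"/v1/operations/{operation_id}", auth_header)["status"]["state"]


def output_dataset_name(unified_dataset_name: str) -> str:
    """Name of the export dataset looked up in step 8."""
    return f"{unified_dataset_name}_classifications_with_data"


def categorization_jobs(project_id: str, train_model: bool) -> List[str]:
    """POST paths for steps 2, 4 (optional), and 6, in order."""
    jobs = [f"/v1/projects/{project_id}/unifiedDataset:refresh"]
    if train_model:
        jobs.append(f"/v1/projects/{project_id}/categorizations/model:refresh")
    jobs.append(f"/v1/projects/{project_id}/categorizations:refresh")
    return jobs


def run_pipeline(project_id: str, output_dataset_id: str, auth_header: str,
                 train_model: bool = False) -> None:
    """Submit each job and wait for its operation before starting the next."""
    paths = categorization_jobs(project_id, train_model)
    paths.append(f"/v1/datasets/{output_dataset_id}:refresh")  # step 9
    for path in paths:
        operation = call("POST", path, auth_header)
        wait_for_operation(operation["id"], lambda op: operation_state(op, auth_header))


def stream_records(dataset_id: str, auth_header: str) -> Iterator[dict]:
    """Step 11: yield records, assuming newline-delimited JSON output."""
    request = urllib.request.Request(
        f"{BASE_URL}/v1/datasets/{dataset_id}/records",
        headers={"Authorization": auth_header, "Accept": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        for line in response:
            if line.strip():
                yield json.loads(line)
```

Passing `get_state` and `sleep` into `wait_for_operation` keeps the polling logic testable without a running Tamr Core instance. A fixed poll interval is the simplest choice; a backoff could be substituted for long-running jobs.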

Alternate Methods for Data Ingestion and Export

This topic describes one method of data ingestion and data export. For other methods, see Exporting a Dataset to a Local File System and Uploading a Dataset into a Project.
