Tamr Core AWS Network Reference Architecture

Tamr’s AWS resources must be launched into an existing Virtual Private Cloud (VPC) setup that meets the requirements described in this topic. The reference network architecture supports the Tamr Core AWS cloud-native deployment following security best practices.

You can implement the recommendations in this topic using the Tamr Terraform AWS networking module.

Note: The name of the Amazon Elasticsearch Service has changed to "Amazon OpenSearch Service". See Amazon's blog post for more information about this change.

AWS Network Reference Architecture Overview

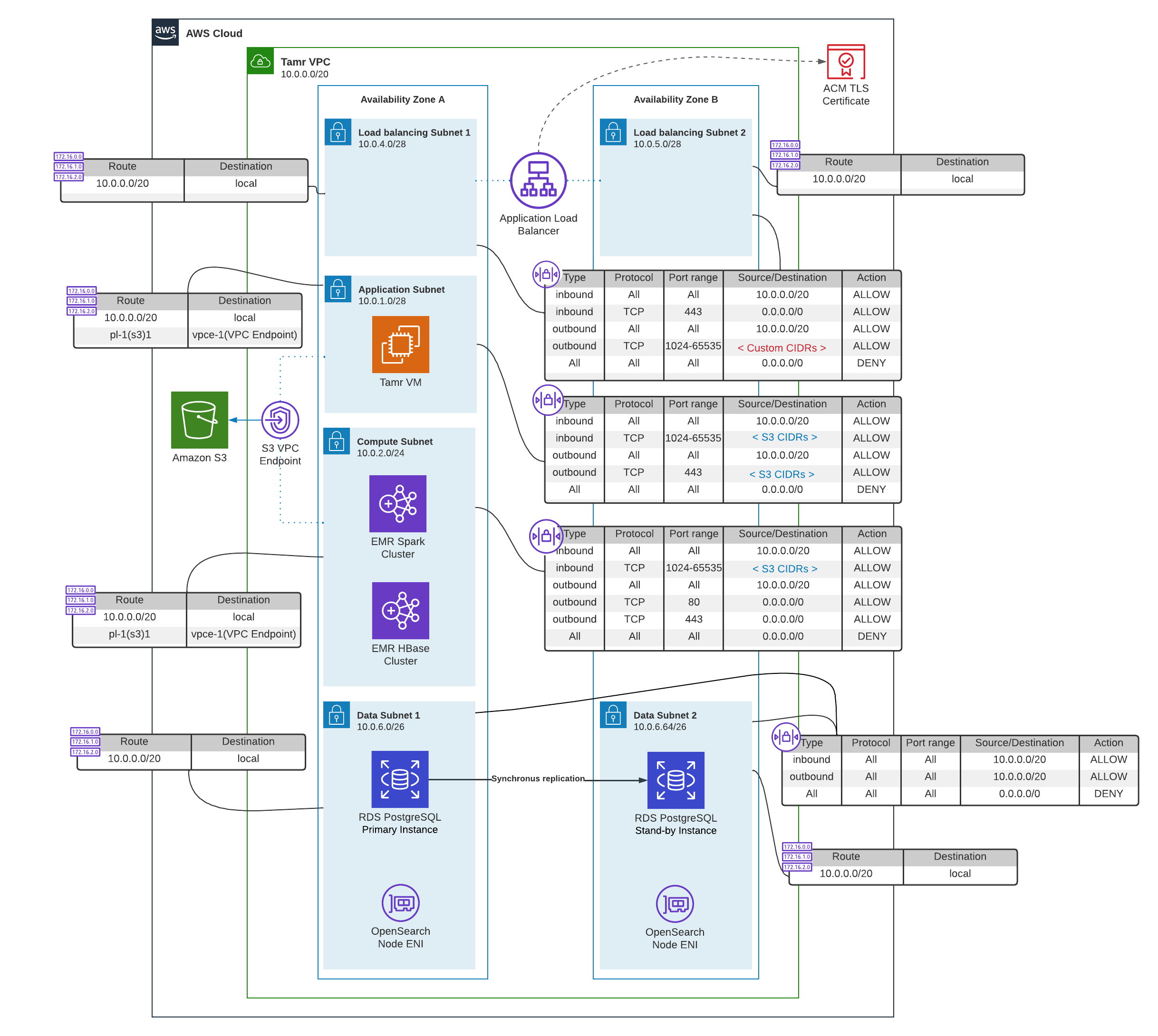

AWS Network Reference Architecture

The Tamr Core VPC spans two Availability Zones (AZs) and includes six (6) private subnets by default:

- Load balancing subnets (2): Host an Application Load Balancer (ALB) to expose the Tamr Core application.

- Application subnet (1): Hosts the EC2 instance where the Tamr Core application is deployed (also known as Tamr VM).

- Compute subnet (1): Hosts the Amazon EMR clusters and is launched in the same AZ as the Application subnet.

- Data subnets (2): Used for deploying a Multi-AZ PostgreSQL Relational Database Service (RDS) instance and a Multi-AZ Amazon OpenSearch Service domain.

Each type of subnet has a dedicated custom network Access Control List (ACL) to control inbound and outbound traffic. The ACLs allow all traffic bound within the VPC (for example, 10.0.0.0/20). A gateway VPC endpoint provides a secure, reliable connection to Amazon S3 without requiring an Internet gateway or NAT device. To allow both the Tamr Core VM and the EMR clusters to access S3 through the endpoint, the ACLs for both the Application and Compute subnets allow traffic to and from S3 IP address ranges. See the AWS IP address range documentation.

The sizing of the subnet CIDR blocks in the diagram above is based on a typical Tamr Core deployment, in which Compute (EMR cluster) and Data subnets (RDS instance and OpenSearch nodes) have a significantly larger number of hosts than the Application subnet (Tamr Core VM only) and Load balancing subnets (ALB only). During deployment of your VPC, resize the CIDR scopes to meet your distinct architectural needs.
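As a rough aid for resizing, the following sketch carves a VPC range into six subnets using Python's standard `ipaddress` module. The 10.0.0.0/20 range is the example used above; the subnet names and prefix lengths in `plan` are hypothetical and are not taken from the diagram, so substitute your own values.

```python
import ipaddress

# Example VPC range from this topic; replace with your own.
vpc = ipaddress.ip_network("10.0.0.0/20")

# Hypothetical prefix lengths: larger blocks for Compute and Data,
# smaller blocks for Application and Load balancing.
plan = {
    "load_balancing_a": 24,
    "load_balancing_b": 24,
    "application": 24,
    "compute": 22,
    "data_a": 23,
    "data_b": 23,
}

def carve(vpc, plan):
    """Allocate subnets largest-first so every block is naturally aligned."""
    cursor = int(vpc.network_address)
    result = {}
    # A smaller prefix length means a larger block; sorted() is stable,
    # so equal-sized subnets keep their order from the plan.
    for name, prefix in sorted(plan.items(), key=lambda item: item[1]):
        subnet = ipaddress.ip_network((cursor, prefix))
        if not subnet.subnet_of(vpc):
            raise ValueError(f"{name} does not fit in {vpc}")
        result[name] = subnet
        cursor += subnet.num_addresses
    return result

def usable_hosts(subnet):
    """AWS reserves five addresses in every subnet."""
    return subnet.num_addresses - 5

subnets = carve(vpc, plan)
for name, subnet in subnets.items():
    print(f"{name:18} {str(subnet):16} {usable_hosts(subnet)} usable addresses")
```

Allocating the largest blocks first avoids alignment gaps, so the check for whether the plan fits in the VPC range stays simple.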

To work properly, the Tamr Core application must be able to resolve the Amazon OpenSearch Service endpoint to the appropriate private IP addresses, so Domain Name System (DNS) support must be enabled for the VPC. See the AWS DNS support for VPC documentation.

For new deployments, Tamr recommends using the complete example in the Terraform AWS networking module. This example includes all the necessary networking components for a secure Tamr Core deployment, including network ACLs and TLS encryption for HTTP traffic.

About Network Access Control Lists

Tamr's reference VPC architecture includes network Access Control Lists (ACLs) as an additional layer of security to control traffic, in case security groups are overly permissive. As described in the previous section, only traffic bound within the VPC is permitted by the baseline ACLs, along with HTTP/s traffic to and from Amazon S3.

The use cases described in the following sections require adding further rules to these ACLs to allow network traffic to flow in the intended ways (for example, to and from an external network).

Network ACLs are stateless; responses to allowed inbound traffic are subject to the rules for outbound traffic and vice versa. The complexity of managing ACLs increases geometrically with each additional rule. Tamr highly recommends that you implement fine-grained traffic control at the security group level to keep network ACLs as simple as possible.

Additionally, network ACLs have a soft limit of 20 rules. If this limit is exceeded, Terraform fails with a NetworkAclEntryLimitExceeded error. AWS recommends keeping each ACL under 20 rules, and its official documentation states that performance issues may arise from the increased workload of processing additional rules. See the AWS network ACL documentation to learn more.
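To make the stateless behavior concrete, here is a minimal Python model of ACL evaluation. It is a simplification for illustration only: real network ACLs also match on protocol, and the `Rule` class, the function names, and the 192.168.0.0/16 client range are all hypothetical. It shows that a request allowed inbound still fails unless a separate outbound rule admits the response.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network

RULE_LIMIT = 20  # soft limit mentioned above

@dataclass(frozen=True)
class Rule:
    number: int      # lower rule numbers are evaluated first
    cidr: str        # remote network the rule applies to
    port_from: int   # start of the destination port range
    port_to: int     # end of the destination port range
    allow: bool

def evaluate(rules, remote_ip, dest_port):
    """Apply the first matching rule; deny if nothing matches."""
    for rule in sorted(rules, key=lambda r: r.number):
        in_cidr = ip_address(remote_ip) in ip_network(rule.cidr)
        if in_cidr and rule.port_from <= dest_port <= rule.port_to:
            return rule.allow
    return False

def round_trip(inbound, outbound, client_ip, client_port, service_port):
    """A connection works only if both the request and the response pass."""
    request_ok = evaluate(inbound, client_ip, service_port)
    # The response is sent back to the client's ephemeral source port.
    response_ok = evaluate(outbound, client_ip, client_port)
    return request_ok and response_ok

def within_limit(rules):
    """Check one direction of an ACL against the soft limit."""
    return len(rules) <= RULE_LIMIT

inbound = [Rule(100, "192.168.0.0/16", 443, 443, True)]
outbound = [Rule(100, "192.168.0.0/16", 1024, 65535, True)]

print(round_trip(inbound, [], "192.168.1.10", 50000, 443))        # response blocked
print(round_trip(inbound, outbound, "192.168.1.10", 50000, 443))  # both directions allowed
```

Because every allowed flow needs a matching rule in each direction, rule counts grow quickly, which is one reason to keep fine-grained control in security groups.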

Considerations for the Application Load Balancer

When setting up an Application Load Balancer (ALB) to expose the Tamr Core application, you must ensure that it can communicate with the Tamr Core VM on its service ports (for example, 9100). You must also verify that the security groups associated with the load balancer allow TCP inbound traffic on its listener ports (typically 80 and 443 for HTTP/s) from the desired sources. (See Accessing Tamr Core Resources through a Private Network.) See the AWS Application Load Balancer documentation to learn more about ALBs.

Always encrypt data in transit when using Tamr Core. You can create an HTTPS listener for the ALB to enable SSL/TLS sessions between your load balancer and clients. To use an HTTPS listener, you must deploy at least one SSL/TLS certificate on your ALB. The recommended way to issue certificates for your load balancer is to use AWS Certificate Manager (ACM). See the AWS HTTPS listener documentation to learn more about HTTPS listeners for your ALB and how to configure them.

You can manage the configurations for the ALB using the Terraform AWS networking module.

Accessing Tamr Core Resources through a Private Network

For security reasons, the Tamr Core deployment should be accessible only through a private network. To accomplish this, AWS provides several options for connecting the Tamr Core VPC with other on-premises or cloud-based networks depending on your particular requirements. You can read about the different alternatives and find the one that best suits your use case in the Amazon Virtual Private Cloud Connectivity Options Whitepaper.

Regardless of how the connection to the VPC is set up, you must ensure that your VPC network resources are configured to allow inbound connections from a private network. At minimum, users must be able to access the Tamr Core application. To achieve this:

- The Application subnet's route table must have a route with your private network's IPv4 address range as the destination CIDR and the appropriate gateway, network interface, or connection (for example, a Virtual Private Gateway) as the target.

- If your configuration includes an ALB, the load balancing subnets' ACLs must allow inbound traffic from your corporate network to port 443 and the return traffic to that network's ephemeral ports (1024-65535). If users will access the Tamr Core VM directly, the application subnet's ACL must allow incoming and outgoing traffic on the configured unify port (9100 by default) and on the ephemeral port range.

- The Tamr Core VM's security group must allow inbound traffic to the desired ports either from your private network's CIDR or from specific security groups where applicable (for example, security groups in a peered VPC or an ALB).
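The route table requirement in the list above can be checked with a small sketch. VPC route tables pick the most specific matching route (longest prefix match); the code below models that with the standard `ipaddress` module. The route entries, the `vgw-example` target, and the 192.168.0.0/16 private range are hypothetical placeholders.

```python
from ipaddress import ip_address, ip_network

def select_route(routes, dest_ip):
    """Return the most specific route containing dest_ip, or None."""
    matches = [
        route for route in routes
        if ip_address(dest_ip) in ip_network(route["destination"])
    ]
    if not matches:
        return None
    # Longest prefix match: the largest prefix length wins.
    return max(matches, key=lambda r: ip_network(r["destination"]).prefixlen)

# Hypothetical route table for the Application subnet.
routes = [
    {"destination": "10.0.0.0/20", "target": "local"},
    {"destination": "192.168.0.0/16", "target": "vgw-example"},
]

route = select_route(routes, "192.168.1.10")
print(route["target"] if route else "no route to the private network")
```

If `select_route` returns `None` for an address in your private network, responses from the Tamr Core VM have no path back to that network, even when the ACLs and security groups are correct.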

To debug or troubleshoot performance issues, you must also enable traffic to other services such as EMR and OpenSearch.

EMR has several user interfaces that can be accessed directly on the cluster nodes. The ports to which you must configure access depend on which applications you deployed in your EMR cluster. See the AWS documentation for the full list of the ports and application user interfaces.

Configuring Outbound Internet Access for Tamr Core VM

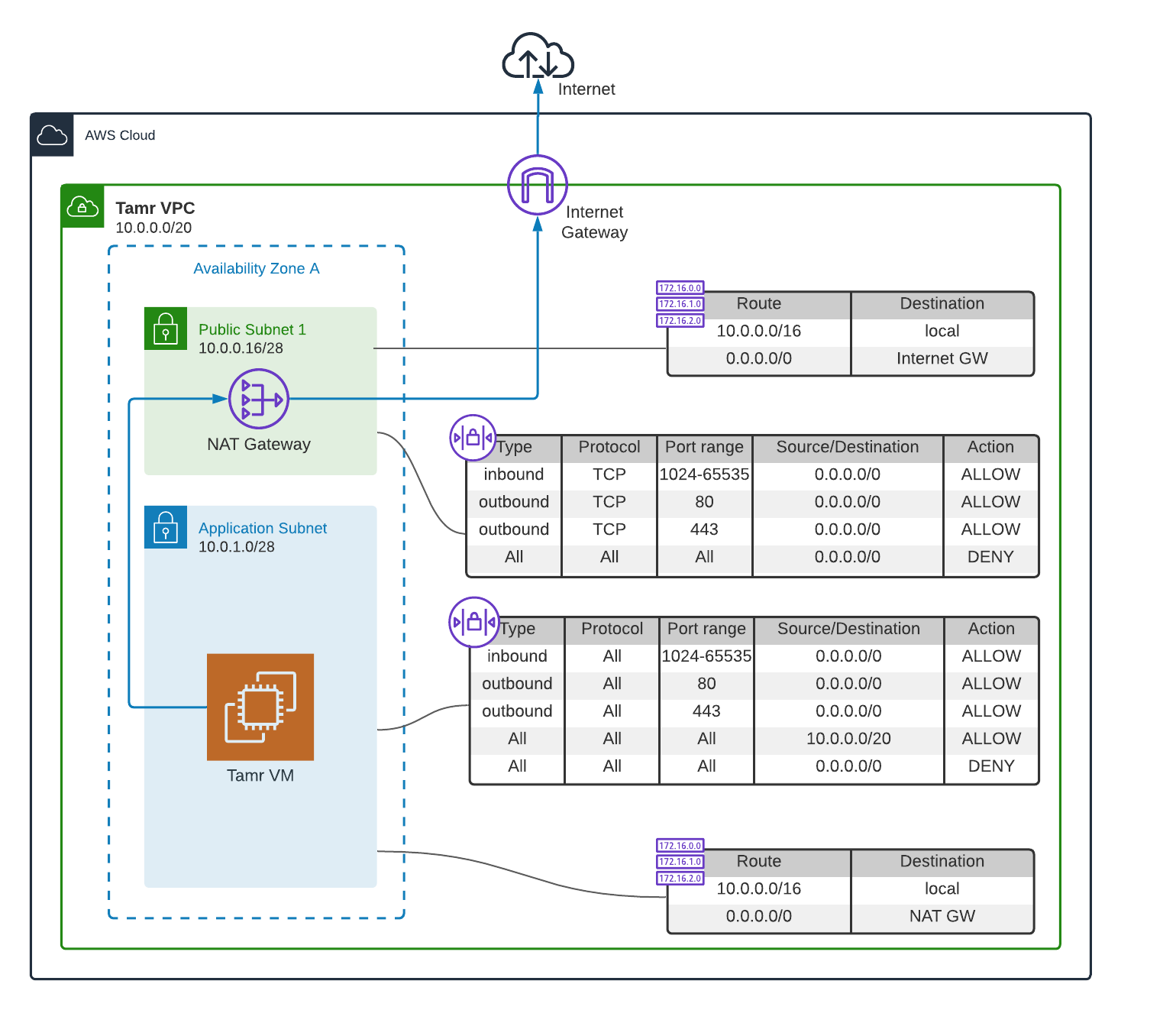

The Tamr Core VM might need to initiate connections to the Internet to reach external services, for example to download software and security patches for your operating system. Tamr recommends deploying a NAT gateway, which allows the Tamr Core VM in the private Application subnet to connect to services outside the VPC but does not allow the Tamr Core VM to receive unsolicited connection requests.

NAT Gateway setup for outbound Internet access

In this scenario, an Internet Gateway must be deployed in the Tamr Core VPC. At least one public subnet must be created in which the NAT gateway can be deployed, preferably in the same AZ as the Application subnet. The Application subnet's route table must be updated so that Internet-bound traffic (0.0.0.0/0) is sent through the NAT gateway.

Assuming all Internet-bound connections will be over HTTP/s, the network ACLs for both the public subnet and the Application subnet must allow outgoing traffic on ports 80 and 443 to the zero-address (0.0.0.0/0) and incoming TCP traffic on the ephemeral ports from the zero-address (0.0.0.0/0).
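The additional ACL entries for this scenario can be listed with a short sketch. The dictionary keys, the starting rule number, and the function name are hypothetical and do not correspond to a specific AWS or Terraform schema; the ports and the 0.0.0.0/0 range come from the paragraph above. Traffic between the Application subnet and the public subnet stays inside the VPC, so it is assumed to be covered by the baseline ACLs.

```python
ANYWHERE = "0.0.0.0/0"
EPHEMERAL_PORTS = (1024, 65535)

def nat_acl_entries(start_rule_number=200):
    """Build illustrative ACL entries for HTTP/s egress and its return traffic."""
    entries = []
    number = start_rule_number
    # Outgoing requests to the Internet on ports 80 and 443.
    for port in (80, 443):
        entries.append({
            "rule_number": number, "egress": True, "protocol": "tcp",
            "cidr": ANYWHERE, "from_port": port, "to_port": port,
            "action": "allow",
        })
        number += 10
    # Responses come back to the ephemeral port that opened the connection.
    entries.append({
        "rule_number": number, "egress": False, "protocol": "tcp",
        "cidr": ANYWHERE, "from_port": EPHEMERAL_PORTS[0],
        "to_port": EPHEMERAL_PORTS[1], "action": "allow",
    })
    return entries

for entry in nat_acl_entries():
    direction = "egress " if entry["egress"] else "ingress"
    print(entry["rule_number"], direction, entry["from_port"], entry["to_port"])
```

The same three entries would apply to each ACL involved, which counts against the per-ACL rule limit discussed earlier.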

If your license includes Tamr Enrichment Services, the EMR cluster also requires outbound Internet access. In this case, you also must make the changes described above in the compute subnet's route table and network ACLs.

To implement the changes described in this section using the Terraform module, you must configure the following variables:

- create_public_subnets

- public_subnets_cidr_blocks

- enable_nat_gateway

Configuring the Interface VPC Endpoint for EMR

The Tamr Core VM can communicate directly with the Amazon EMR API using an interface VPC endpoint without traversing the Internet. Communication between the EMR API and the VPC is conducted entirely within the AWS network. Tamr recommends this implementation for deployments that do not allow Internet access. With this implementation, there is no need to set up an Internet gateway, NAT device or NAT gateway, VPN connection, or AWS Direct Connect connection.

When you create the interface VPC endpoint, you must add an ingress rule to the endpoint's security group to allow incoming traffic from the Tamr Core VM. No egress rules are required.

For more information about how to connect to Amazon EMR using an interface VPC endpoint, see the AWS documentation. For an example that includes the VPC endpoint, see the Tamr GitHub repository.
