Deploying a Scalable Tamr Core Instance on AWS

Install the Tamr Core software package on Amazon Web Services (AWS).

This topic provides an overview of Tamr Core's scalable cloud-native offering on AWS, basic network requirements and security features, and deployment prerequisites and steps.

Note: The name of the Amazon Elasticsearch Service has changed to "Amazon OpenSearch Service". See Amazon's blog post for more information about this change.

AWS Overview

In a cloud-native Tamr Core deployment, some or all major dependencies are externalized. Deploying Tamr Core on AWS makes use of billable services from the AWS stack. A complete Tamr Core deployment requires that you configure the following services:

- Amazon EMR (Elastic MapReduce): Hosts an HBase cluster for internal data storage and Spark clusters for on-demand computation.

- Amazon S3 (Simple Storage Service): Stores persistent data from EMR.

- Amazon RDS (Relational Database Service): Provisions a PostgreSQL database instance for application metadata storage.

- Amazon OpenSearch Service: Launches an OpenSearch cluster to power the Tamr Core user interface (UI).

- Amazon EC2: Provisions a VM to host the Tamr Core application.

- Amazon CloudWatch: Collects metrics from launched AWS resources.

Note: You must deploy Tamr Core into a region that offers all of the above services. See AWS Services by Region for a list of AWS services by region.

Expected Duration for Provisioning and Configuring Deployments on AWS

Typically, you can provision AWS cloud resources in about 30 minutes. Additionally, plan to allocate one day for the configuration and execution of the steps described in this topic.

Requirements for Users Deploying on AWS

Users deploying Tamr Core on AWS must be skilled in Linux and Terraform.

These users must also be familiar with the following AWS services:

- EC2

- EMR

- RDS

- OpenSearch Service

- S3

- Networking (VPC, subnets, security groups)

Costs

Tamr Core runtime costs equal the cost of the deployed EC2 instance, plus EBS cost.

Optionally, you can store backups in S3, which incurs an additional per-GB cost.

You may also incur optional costs for RDS, billed per DB instance-hour consumed, and for CloudTrail, billed per data event.

Tamr Core is priced per license, with additional costs for optional services and support. For more details on costs, see the Tamr AWS Marketplace product listing.

Sizing Guidelines

For single-node deployment sizing guidelines, see AWS Sizing and Limits.

The following table provides cloud-native environment size configurations for AWS. Size refers to the number of records:

- Small: 10 million - 100 million records

- Medium: 100 million - 500 million records

- Large: 500 million - 1 billion records

| Component | Small | Medium | Large |
|---|---|---|---|
| Tamr Core | 1 x r6a.2xlarge, 300 GB | 1 x r6a.2xlarge, 300 GB | 1 x r6a.2xlarge, 300 GB |
| EMR HBase Master | 1 x r6g.xlarge | 1 x r6g.xlarge | 3 x r6g.xlarge |
| EMR HBase Worker | 20 x r6g.xlarge | 40 x r6g.xlarge | 80 x r6g.xlarge |
| EMR Spark Master | 1 x r6g.xlarge | 1 x r6g.xlarge | 1 x r6g.xlarge |
| EMR Spark Worker | 4 x r6g.8xlarge | 8 x r6g.8xlarge | 16 x r6g.8xlarge |
| OpenSearch | 3 x r5d.xlarge, 500 GB | N/A | N/A |

See the AWS documentation for all instance types supported by EMR. Do not use an AWS Graviton processor for the VM that runs Tamr Core.
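You can apply the tier boundaries above mechanically. The following bash sketch maps a record count to a tier. The function name `size_tier` is hypothetical. Adjacent ranges in the list share endpoints, so this sketch assigns a boundary value (for example, exactly 100 million records) to the smaller tier. That choice is an assumption.

```bash
#!/usr/bin/env bash
# Sketch: map a record count to a size tier from the sizing table.
# Assumption: a value on a shared boundary belongs to the smaller tier.
size_tier() {
  local records="$1"
  if (( records < 10000000 )); then
    echo "out-of-range"   # below the smallest tier in the table
  elif (( records <= 100000000 )); then
    echo "Small"
  elif (( records <= 500000000 )); then
    echo "Medium"
  elif (( records <= 1000000000 )); then
    echo "Large"
  else
    echo "out-of-range"   # above the largest tier in the table
  fi
}
```

For example, `size_tier 250000000` prints `Medium`.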

Limits on AWS Services

Limits on services are set by AWS, not by Tamr. See the AWS documentation for the service quotas (limits) that apply to each AWS service used by Tamr Core.
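EMR cluster nodes are EC2 instances, so they count against your account's EC2 quotas. AWS expresses On-Demand instance quotas in vCPUs. The following bash sketch totals the vCPUs for the EC2 instances in each tier of the sizing table. You can compare the result with the Running On-Demand Standard instances quota in the Service Quotas console.

The per-type vCPU counts are hard-coded assumptions taken from AWS instance specifications: 8 for r6a.2xlarge, 4 for r6g.xlarge, and 32 for r6g.8xlarge. OpenSearch nodes are excluded because they run in the managed OpenSearch Service rather than as EC2 instances in your account. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: total EC2 vCPUs per size tier, for comparison with EC2 quotas.
# vCPU counts per instance type are assumptions; verify them with AWS.
vcpus_for_type() {
  case "$1" in
    r6a.2xlarge) echo 8 ;;
    r6g.xlarge)  echo 4 ;;
    r6g.8xlarge) echo 32 ;;
    *) echo "unknown instance type: $1" >&2; return 1 ;;
  esac
}

# Prints the total vCPUs for tier small|medium|large.
# Spec order: Tamr Core VM, HBase master, HBase workers, Spark master, Spark workers.
tier_vcpus() {
  local spec
  case "$1" in
    small)  spec="1:r6a.2xlarge 1:r6g.xlarge 20:r6g.xlarge 1:r6g.xlarge 4:r6g.8xlarge" ;;
    medium) spec="1:r6a.2xlarge 1:r6g.xlarge 40:r6g.xlarge 1:r6g.xlarge 8:r6g.8xlarge" ;;
    large)  spec="1:r6a.2xlarge 3:r6g.xlarge 80:r6g.xlarge 1:r6g.xlarge 16:r6g.8xlarge" ;;
    *) echo "unknown tier: $1" >&2; return 1 ;;
  esac
  local total=0 item count itype per
  for item in $spec; do
    count="${item%%:*}"   # text before the colon: instance count
    itype="${item#*:}"    # text after the colon: instance type
    per="$(vcpus_for_type "$itype")" || return 1
    total=$(( total + count * per ))
  done
  echo "$total"
}
```

For example, `tier_vcpus medium` prints `432`.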

Security Features

AWS services expose controls for enforcing secure practices. Tamr Core leverages the following practices:

- Enforcement of encryption at rest on EMR clusters, using server-side S3 encryption (SSE-S3) on the underlying S3 buckets that store data.

- Enforcement of encryption at rest and in transit on OpenSearch cluster nodes.

- Encryption of the Tamr Core VM’s root volume.

- Controlled in-bound network traffic to Tamr Core infrastructure. Configure security group rules based on your organization's needs to allow specific VPN or organizational IP addresses.

- Network Access Control Lists (ACLs) and security groups to control access (internal access through a VPC network or secure public access over HTTPS) and to specify the ports that must be kept open for each type of connection. You use these ports to access the Tamr Core UI and to run commands that check instance health. Tamr Core uses an AWS Application Load Balancer (ALB) to allow clients to access Tamr Core securely over HTTPS.

Note: Encryption at rest: Because a standard Tamr Core deployment on cloud infrastructure uses dedicated service instances, Tamr Core depends on service-level encryption for encryption at rest. This ensures that Tamr Core’s data is encrypted using keys that are distinct from those used by other applications, while also allowing keys to be managed using the cloud provider’s standard key management service.
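After you apply the Terraform modules, you can spot-check some of these settings with the AWS CLI. The following bash sketch checks two things:

- that a bucket's default encryption is SSE-S3
- that an OpenSearch domain has encryption at rest and node-to-node encryption enabled

It assumes a configured AWS CLI with permission to read bucket encryption (`s3:GetEncryptionConfiguration`) and to describe OpenSearch domains (`es:DescribeDomain`). The function names, and the bucket and domain names you pass in, are placeholders. Node-to-node encryption covers only part of in-transit encryption, so this is not a complete audit.

```bash
#!/usr/bin/env bash
# Sketch: spot-check encryption settings with the AWS CLI.
# Bucket and domain names are supplied by the caller (placeholders).

# Returns 0 if the bucket's default encryption algorithm is AES256 (SSE-S3).
bucket_uses_sse_s3() {
  local algo
  algo="$(aws s3api get-bucket-encryption --bucket "$1" \
    --query 'ServerSideEncryptionConfiguration.Rules[0].ApplyServerSideEncryptionByDefault.SSEAlgorithm' \
    --output text)" || return 1
  [ "$algo" = "AES256" ]
}

# Returns 0 if the OpenSearch domain reports encryption at rest and
# node-to-node encryption as enabled.
domain_encrypted() {
  local at_rest in_transit
  at_rest="$(aws opensearch describe-domain --domain-name "$1" \
    --query 'DomainStatus.EncryptionAtRestOptions.Enabled' --output json)" || return 1
  in_transit="$(aws opensearch describe-domain --domain-name "$1" \
    --query 'DomainStatus.NodeToNodeEncryptionOptions.Enabled' --output json)" || return 1
  [ "$at_rest" = "true" ] && [ "$in_transit" = "true" ]
}
```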

For non-production environments, configuring ACLs, an ALB, and HTTPS is strongly recommended but not required.

Important: If you do not configure ACL, ALB, and HTTPS in a non-production deployment, all users on the network will have access to the data. Use a unique password for this deployment.

See Tamr Core AWS Network Reference Architecture for more information about these security features.

Tamr recommends following the principle of least privilege when deploying on AWS. See the AWS documentation on the principle of least privilege for further information.

Tamr encrypts the credentials for RDS in transit and at rest. Alternatively, you can use AWS Secrets Manager to maintain the RDS credentials in a central, secured location and propagate the credential information to Tamr. See the AWS Secrets Manager documentation for instructions on creating and managing secrets.

Tamr Core uses Instance Metadata Service Version 2 (IMDSv2), so you can disable IMDSv1 without affecting the operation of Tamr Core. For more information about IMDSv2, see Use IMDSv2.
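The following bash sketch covers two related tasks:

- reading instance metadata with an IMDSv2 session token
- requiring tokens on an instance with `aws ec2 modify-instance-metadata-options`, which turns off IMDSv1

The function names and the instance ID are hypothetical. The 300-second token TTL is an arbitrary choice.

```bash
#!/usr/bin/env bash
# Sketch: IMDSv2 token-based metadata access, and enforcing IMDSv2 on an instance.
IMDS="http://169.254.169.254/latest"

# Fetches an instance-metadata path (e.g. meta-data/local-ipv4) using a session token.
imds_get() {
  local token
  token="$(curl -s -X PUT "$IMDS/api/token" \
    -H "X-aws-ec2-metadata-token-ttl-seconds: 300")" || return 1
  curl -s -H "X-aws-ec2-metadata-token: $token" "$IMDS/$1"
}

# Requires session tokens (IMDSv2 only) on the given instance.
# Run wherever AWS credentials with ec2:ModifyInstanceMetadataOptions are available.
require_imdsv2() {
  aws ec2 modify-instance-metadata-options --instance-id "$1" \
    --http-tokens required --http-endpoint enabled
}
```

On the Tamr Core instance, `imds_get meta-data/local-ipv4` returns the instance's private IP address.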

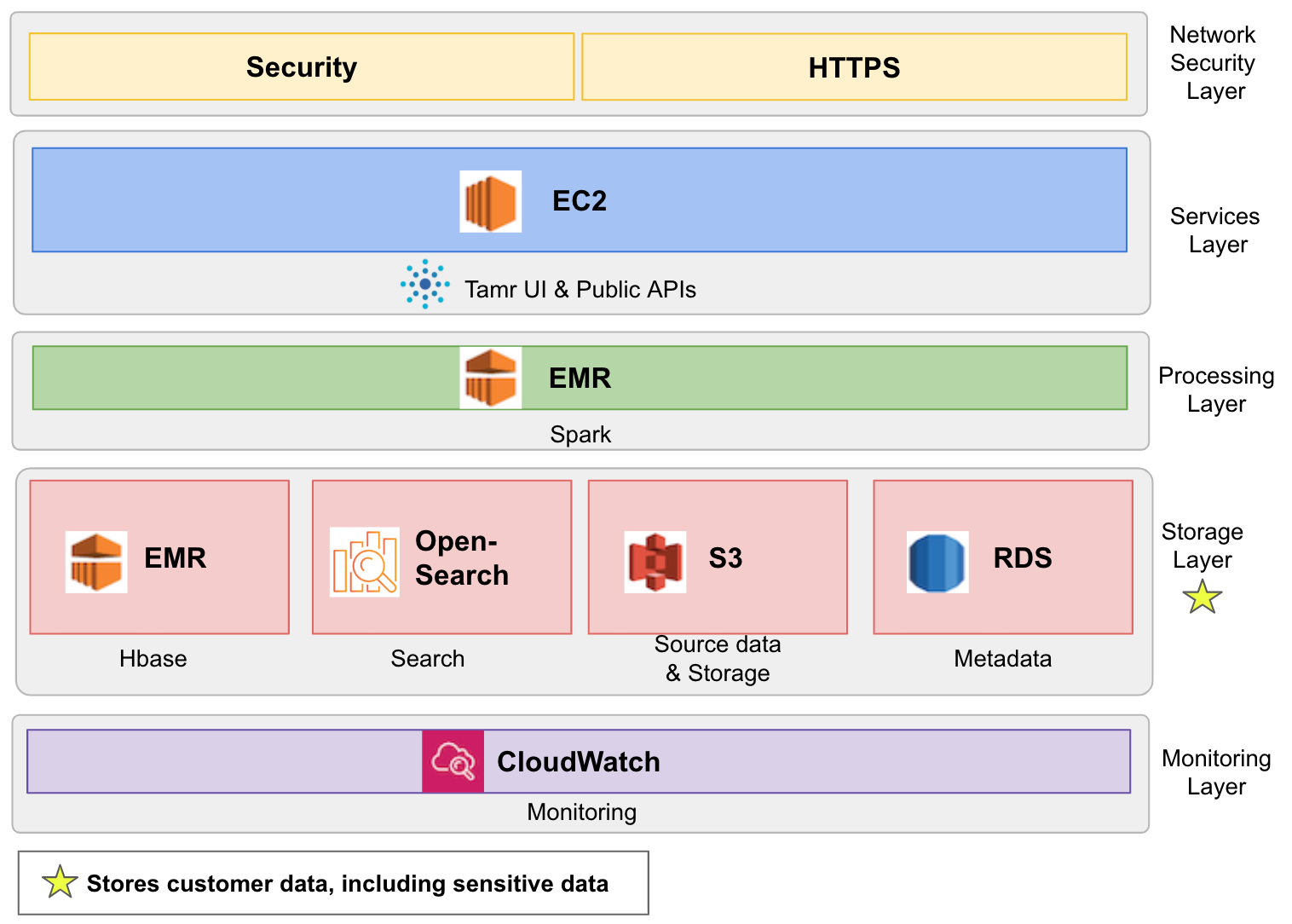

Data Flow in Cloud-Native Deployments on AWS

The data flow through the Tamr Core cloud-native deployment on AWS

This diagram shows the following components.

- Security: Network ACLs control access (internal access through a VPC network or secure public access over HTTPS) and specify the ports that must be kept open for each type of connection. See Networking Prerequisites.

Note: None of Tamr's deployed resources need to be configured for public access for normal operation. Tamr recommends that you do not make these resources publicly accessible.

- Tamr UI & Public APIs: The Tamr Core application is deployed on a single EC2 instance. A number of internal microservices and external service dependencies also run on the instance. Users sign in to Tamr Core using LDAP or SAML authentication.

- Data Processing - Spark: To run Spark jobs, Tamr Core uses Amazon EMR which is a managed cluster platform that simplifies running big data frameworks.

- Storage: The storage layer stores customer data, including sensitive data. This layer includes the following:

  - Internal Database (HBase): Tamr Core launches an HBase cluster using Amazon EMR. HBase works by sharing its filesystem and serving as a direct input and output to the EMR framework and execution engine.

  - Amazon S3: Amazon S3 is used as a shared filesystem between Tamr Core and Spark to store data including HBase StoreFiles (HFiles) and table metadata, .jar files, Spark logs, and so on.

- Source Data: The input dataset(s) can be uploaded from the local filesystem or from a connected external source. See Uploading a Dataset for more information about uploading source data to Tamr Core.

- Search: Tamr Core uses Amazon OpenSearch Service to host a cluster that serves as a search engine and powers the Tamr Core UI. Customer data, including sensitive data, may be stored here.

- Metadata: Tamr Core uses Amazon RDS to host a PostgreSQL database instance that stores application metadata.

- Monitoring and Metrics - Amazon CloudWatch: Enable CloudWatch on launched AWS resources to monitor both real-time and historical metrics. These metrics provide insight into the performance of services in the Tamr Core stack. See also Logging in Cloud Platform Deployments.

How is the Deployment Orchestrated?

Tamr orchestrates cloud-native deployment on AWS using both Terraform and manual installation.

Deploy Hardware with Terraform

Terraform by HashiCorp is an infrastructure-as-code tool for managing infrastructure. HashiCorp maintains an AWS provider that allows you to manage AWS resources using Terraform.

Tamr maintains a set of Terraform modules for deploying the AWS infrastructure needed by Tamr Core, including a module that creates a configuration file for the Tamr Core software.

Manually Install Tamr Core Software

To start the Tamr Core application, copy the Tamr Core software and the generated Tamr Core configuration file to the Tamr Core EC2 instance, then run the startup scripts bundled in the Tamr Core software package. See Installation Process, below, for detailed instructions.

Tamr Terraform Modules Reference

Tamr maintains Terraform modules to provision and manage the infrastructure for a cloud-native environment in AWS.

Each module provides suggested patterns of use in its /examples directory, in addition to a minimal in-line example in the module’s README file. The README file also provides an overview of the resources the module provisions, as well as the module’s input and output values.

The following Terraform modules in GitHub are used to deploy an AWS cloud-native Tamr Core environment.

| Module | Description |
|---|---|
| AWS Networking | Deploys the network reference architecture, following security best practices. |
| Tamr VM | Provisions an Amazon EC2 instance for the Tamr Core VM. |
| EMR (HBase, Spark) | Deploys either (1) a static HBase and/or Spark cluster on Amazon EMR or (2) supporting infrastructure for an ephemeral Spark cluster. |
| OpenSearch (formerly "Elasticsearch") | Deploys an OpenSearch cluster on Amazon OpenSearch Service. |
| RDS Postgres Database | Deploys a DB instance running PostgreSQL on Amazon RDS. |
| S3 | Deploys S3 buckets and bucket access policies. |
| Tamr Configuration | Populates Tamr Core configuration variables with values needed to set up Tamr Core software on an EC2 instance. |

Deployment Prerequisites

Terraform Prerequisites

Tamr maintains a set of Terraform modules that declare the AWS resources needed for a Tamr Core deployment. To apply Tamr’s AWS modules, verify that the following prerequisites are met.

Install the Terraform Binary

Refer to the README file for each Terraform module, included with the module source files in the repository, for the supported Terraform version and AWS Provider plugin package version. Note that the AWS Provider is frequently upgraded. For information on new releases, see the releases page for terraform-provider-aws.
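You can check in a script that the installed binary meets a module's requirement. The following bash sketch reads the version from `terraform version -json` and compares it with a minimum that you pass in. Take that value from the module's README; no version is assumed here.

The sketch checks only a lower bound, so if a README also specifies an upper bound, check that separately. It relies on `sort -V`, which GNU coreutils provides. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: verify the installed Terraform version against a minimum from a module README.

# Returns 0 if version $1 >= version $2 (version-aware sort).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

check_terraform_version() {
  local required="$1" installed
  # `terraform version -json` prints a JSON object with a "terraform_version" field.
  installed="$(terraform version -json |
    sed -n 's/.*"terraform_version": *"\([^"]*\)".*/\1/p')"
  if [ -z "$installed" ]; then
    echo "could not determine the Terraform version" >&2
    return 1
  fi
  if ! version_ge "$installed" "$required"; then
    echo "Terraform $installed is older than required $required" >&2
    return 1
  fi
  echo "Terraform $installed satisfies >= $required"
}
```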

Configure Terraform’s AWS Provider with Proper Credentials

Before using the Tamr Terraform modules, ensure that the IAM user or role that runs the Terraform commands has the appropriate permissions, and configure the AWS provider with the necessary credentials. See Terraform IAM Principal Permissions for AWS for detailed policies that grant the necessary permissions.

Note: Learn more about AWS provider permissions in the Terraform documentation.
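The Terraform AWS provider and the AWS CLI both read the standard credential sources: environment variables, shared config and credentials files (including the profile named by `AWS_PROFILE`), and instance roles. Running `aws sts get-caller-identity` is therefore a quick way to see which principal Terraform is likely to run as.

The bash sketch below compares that ARN against a glob pattern. The function names and the role name `TerraformDeployer` in the usage example are hypothetical. When you assume a role, the reported ARN is the session ARN (`arn:aws:sts::<account>:assumed-role/<role>/<session>`), not the IAM role ARN. This is why the check uses a pattern.

```bash
#!/usr/bin/env bash
# Sketch: confirm the IAM principal that AWS tooling (and likely Terraform) will use.

current_principal_arn() {
  aws sts get-caller-identity --query Arn --output text
}

# Returns 0 if the active principal's ARN matches the glob pattern in $1.
check_principal() {
  local pattern="$1" actual
  actual="$(current_principal_arn)" || return 1
  # An unquoted $pattern on the right-hand side of == is matched as a glob.
  if [[ "$actual" == $pattern ]]; then
    echo "Terraform is likely to run as $actual"
    return 0
  fi
  echo "active principal $actual does not match $pattern" >&2
  return 1
}
```

For example: `check_principal 'arn:aws:sts::*:assumed-role/TerraformDeployer/*'`.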

Networking Prerequisites

Tamr’s AWS deployment modules assume that resources are deployed into an existing VPC. See Tamr Core AWS Network Reference Architecture for network architecture requirements and details.

Network Access Control Lists and HTTPS Prerequisites

- Secure external access to Tamr Core via HTTPS by deploying an ALB with a TLS certificate and configuring the Tamr Core instance as a backend for that ALB. See a complete example.

- Configure the AWS Network ACLs. See network ACLs in the AWS documentation for instructions. Network ACL configuration requirements:

  - Allow only internal access to the Tamr Core default port, `9100` (TCP).

  - Open port `443` for HTTPS, with a restrictive IP range that you specify using IPv4 addresses in CIDR notation, such as `1.2.3.4/32`.

    Note: If you plan to forward HTTP traffic to HTTPS, also open port `80`.
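The following bash sketch validates a client CIDR before you use it in the port `443` rule. It then prints, but does not run, the corresponding `aws ec2 create-network-acl-entry` command. The ACL ID and rule number are placeholders, and the function names are hypothetical. Rejecting any `/0` prefix is this sketch's own interpretation of "restrictive".

Network ACLs are stateless, so you also need outbound rules that allow return traffic on ephemeral ports.

```bash
#!/usr/bin/env bash
# Sketch: validate a client CIDR and print (not run) an inbound HTTPS NACL rule.

# Returns 0 if $1 is an IPv4 CIDR (a.b.c.d/n) with octets <= 255 and prefix 1-32.
is_restrictive_cidr() {
  local re='^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})/([0-9]{1,2})$'
  [[ "$1" =~ $re ]] || return 1
  local i
  for i in 1 2 3 4; do
    # 10# forces base 10 so octets with leading zeros are not read as octal.
    (( 10#${BASH_REMATCH[i]} <= 255 )) || return 1
  done
  local prefix=$(( 10#${BASH_REMATCH[5]} ))
  (( prefix >= 1 && prefix <= 32 )) || return 1
  return 0
}

# Usage: print_https_nacl_rule <network-acl-id> <rule-number> <cidr>
print_https_nacl_rule() {
  local acl_id="$1" rule_number="$2" cidr="$3"
  if ! is_restrictive_cidr "$cidr"; then
    echo "refusing invalid or unrestricted CIDR: $cidr" >&2
    return 1
  fi
  echo "aws ec2 create-network-acl-entry --network-acl-id $acl_id --ingress" \
    "--rule-number $rule_number --protocol tcp --port-range From=443,To=443" \
    "--cidr-block $cidr --rule-action allow"
}
```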

Additional Prerequisites

- Obtain a license key and the Tamr Core software package by contacting Tamr Support at help@tamr.com. You need to provide the license key when accessing the Tamr Core instance via a browser.

- (Optional) Prepare a small CSV dataset to profile. After you install Tamr Core, you can use this dataset to run a small profiling job that checks the health and readiness of your deployment’s EMR clusters.

Installation Process

Step 1: Configure the Terraform Modules

Each module’s source files in the repository include:

- A `README` file that describes how to use the module.

  Note: Tamr also provides a `README` file for each nested submodule.

- An example with a suggested pattern of use, provided in each module repository’s `/examples` folder.

Invoke each module, filling in the required parameters and any other optional parameters you require for your deployment. In some situations, it may be appropriate to invoke the nested submodules if the root module is too prescriptive for your desired deployment setup.

Tamr recommends that you add values for the Tamr configuration Terraform module last, as many input variables depend on values that are output from the other Tamr AWS Terraform modules.

For reference, the Tamr configuration Terraform module has an example of a full AWS cloud-native deployment with example invocations of all Tamr AWS Terraform modules. Contact Tamr Support at help@tamr.com for access to the configuration module repository.

Note: The Tamr Terraform modules follow their own release cycles. To use a newer version, update the version in the `ref` query parameter of the `source` argument in the module invocation:

module "example_source_reference" {
  source = "git@github.com:Datatamer/<repository name>.git?ref=0.1.0"
}

To help Tamr understand how this deployment mechanism is used, set the following environment variable before running Terraform commands:

$ export TF_APPEND_USER_AGENT="APN/1.0 tamr/1.0 cloud-native/1.0"

Step 2: Apply the Terraform Modules

Important: Complete the Terraform Prerequisites before applying the Terraform modules.

To apply the Terraform modules:

- Initialize the provider and other dependencies:

  terraform init

- Check what changes (creations, updates, deletions) will be introduced:

  terraform plan

- Apply the changes from the plan. This step can take 20-45 minutes to execute:

  terraform apply
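The following bash sketch splits these commands into two hypothetical functions so that you can review the plan before applying it. The first function sets `TF_APPEND_USER_AGENT` as described in Step 1, then saves the plan to a file. The second applies exactly that saved plan. Terraform does not prompt for approval when applying a saved plan file, so review the `terraform plan` output before you call the apply function. The plan file name is an assumption.

```bash
#!/usr/bin/env bash
# Sketch: Step 2 as plan-then-apply, assuming terraform is on PATH and
# AWS credentials are configured.
TAMR_PLAN_FILE="tamr.tfplan"   # hypothetical plan file name

# Initializes the module directory and writes the proposed changes to a plan file.
plan_tamr_infra() {
  local module_dir="${1:-.}"
  # Identifies this deployment mechanism to Tamr (see Step 1).
  export TF_APPEND_USER_AGENT="APN/1.0 tamr/1.0 cloud-native/1.0"
  (
    cd "$module_dir" &&
      terraform init &&
      terraform plan -out="$TAMR_PLAN_FILE"
  )
}

# Applies exactly the saved plan; call this only after reviewing the plan output.
apply_tamr_plan() {
  local module_dir="${1:-.}"
  export TF_APPEND_USER_AGENT="APN/1.0 tamr/1.0 cloud-native/1.0"
  ( cd "$module_dir" && terraform apply "$TAMR_PLAN_FILE" )
}
```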

Step 3: Install the Tamr Software Package

Step 3.1: Prepare EC2 Instance for Tamr Software Installation

To ensure that Tamr is installed and runs properly:

- Set ulimit resource limits. For detailed instructions on setting these values, see Setting ulimit Limits.

- Install the appropriate PostgreSQL 12 clients (`pg_dump` and `pg_restore`) for your EC2 instance’s operating system.
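Before you continue, you can check both prerequisites from a shell on the instance. The following bash sketch defines two hypothetical functions:

- `check_ulimit_min` compares a soft limit against a minimum that you supply. Take the actual values from Setting ulimit Limits; none are assumed here.
- `check_pg_clients` confirms that `pg_dump` and `pg_restore` are on the `PATH` and report the expected major version.

```bash
#!/usr/bin/env bash
# Sketch: pre-installation checks on the Tamr Core EC2 instance.

# Usage: check_ulimit_min <ulimit-option-letter> <minimum>, e.g. n for open files.
check_ulimit_min() {
  local opt="$1" min="$2" current
  current="$(ulimit -S "-$opt")" || return 1
  if [ "$current" = "unlimited" ]; then
    return 0
  fi
  if [ "$current" -lt "$min" ]; then
    echo "soft limit for ulimit -$opt is $current, below $min" >&2
    return 1
  fi
}

# Returns 0 if pg_dump and pg_restore exist and report major version $1 (default 12).
check_pg_clients() {
  local want="${1:-12}" tool major
  for tool in pg_dump pg_restore; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      return 1
    fi
    # Version output looks like: "pg_dump (PostgreSQL) 12.17"
    major="$("$tool" --version | awk '{print $3}' | cut -d. -f1)"
    if [ "$major" != "$want" ]; then
      echo "$tool reports major version $major, expected $want" >&2
      return 1
    fi
  done
}
```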

Step 3.2: Unzip the Software Package

SSH into the Tamr Core EC2 instance and unzip the Tamr Core Software Package (unify.zip) into the home directory designated for your Tamr Core application.

Step 3.3: Start ZooKeeper

To start ZooKeeper, run:

<home-directory>/tamr/start-zk.sh

Step 3.4: Mount the Tamr Configuration File

Take the output of the terraform-aws-tamr-config module (or manually populate a Tamr Core configuration file) and create a YAML configuration file on the Tamr Core EC2 instance.

To set the configuration, run:

`<home-directory>/tamr/utils/unify-admin.sh config:set --file /path/to/<Tamr-configuration-filename>.yml`

Step 3.5: Start Tamr Core and Its Dependencies

To start Tamr Core's dependencies and the Tamr Core application, run:

<home-directory>/tamr/start_dependencies.sh

<home-directory>/tamr/start-unify.sh
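The startup commands, from ZooKeeper through the Tamr Core application, run in a fixed order, so a failure in one should stop the rest. The following bash sketch chains them in one hypothetical `start_tamr` function. It assumes that the scripts can be invoked by absolute path from any working directory; confirm this for your release.

```bash
#!/usr/bin/env bash
# Sketch: start ZooKeeper, set the configuration, then start dependencies and
# Tamr Core, stopping at the first failure.
# Usage: start_tamr <home-directory> <path/to/Tamr-configuration-file>.yml
start_tamr() {
  local home="$1" config_file="$2"
  local tamr="$home/tamr"

  if [ ! -f "$config_file" ]; then
    echo "configuration file not found: $config_file" >&2
    return 1
  fi

  # && runs each command only if the previous one succeeded.
  "$tamr/start-zk.sh" &&
    "$tamr/utils/unify-admin.sh" config:set --file "$config_file" &&
    "$tamr/start_dependencies.sh" &&
    "$tamr/start-unify.sh"
}
```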

Verify Deployment

To verify that your deployment’s EMR clusters are functioning properly:

- Navigate to `http://<tamr-ec2-private-ip>:9100` in a browser and sign in using the credentials you obtained from Tamr Support.

- Upload a small CSV dataset, and profile it.

Tip: If the Tamr Core UI is not accessible at this address, check the `tamr/logs/` directory on the EC2 instance.
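Tamr Core may take some time to start, so a scripted check can poll the UI port before you open a browser. The following bash sketch uses `curl` to request `http://<host>:9100/`. It treats any HTTP status from 100 to 499 (for example, a redirect to the sign-in page) as a sign that the service is up. That criterion, the function name, and the default retry settings are assumptions.

```bash
#!/usr/bin/env bash
# Sketch: poll the Tamr Core UI port until it answers or attempts run out.
# Usage: wait_for_tamr_ui <host> [attempts] [seconds-between-attempts]
wait_for_tamr_ui() {
  local host="$1" attempts="${2:-30}" interval="${3:-10}" code i
  for (( i = 1; i <= attempts; i++ )); do
    # -w prints only the status code; curl reports 000 if it cannot connect.
    code="$(curl -s -o /dev/null -w '%{http_code}' "http://${host}:9100/")"
    if [[ "$code" =~ ^[1-4][0-9][0-9]$ ]]; then
      echo "Tamr Core UI responded with HTTP $code"
      return 0
    fi
    sleep "$interval"
  done
  echo "Tamr Core UI did not respond; check the tamr/logs/ directory" >&2
  return 1
}
```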

To verify that your deployment’s OpenSearch cluster is functioning properly:

- Create a schema mapping project and add a small CSV dataset to the project.

- Bootstrap some attributes, and then run Update Unified Dataset.

Verify that you now see records on the Unified Dataset page.

For more detailed instructions, see Tamr Core Installation Verification Steps.

Configuring Core Connect

To move data files from cloud storage into Tamr Core, and exported datasets from Tamr Core to cloud storage, you use the Core Connect service. See Configuring Core Connect.

Additional Resources

See the Tamr Core Help Center for information on maintaining availability in AWS cloud native deployments.
