Tamr LLM Query Guidance

Overview

This article provides guidance on using LLM and its associated query capabilities to achieve different business outcomes. We walk through several scenarios and provide examples for each. The guiding theme in these examples is that LLM is essentially real-time processing through the entire Tamr mastering model workflow. Specifically, this includes:

For ‘records’ matching:

- Applying the blocking model for identifying record pairs

- Applying the Tamr ML model for classifying whether or not the record pairs are matches

For ‘clusters’ matching:

- Applying the blocking model for identifying record pairs

- Identifying to which clusters those paired records belong

Note that for both record and cluster matching, the incoming record must form at least one pair that passes the blocking model. This means the results you want from LLM should be consistent with the results you want from clustering in general.
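The two flows can be summarized in a short Python sketch. This is not Tamr's implementation or API: `match_incoming`, `passes_blocking`, `classify_pair` and `cluster_of` are hypothetical stand-ins for the project's blocking model, ML model and published clusters, and the demo blocking rule and classifier are toys. It shows only that both modes start from the same blocking step, so an incoming record that forms no pairs gets no result in either mode.

```python
from collections.abc import Callable

# A record maps attribute names to lists of values, as in this article's JSON requests.
Record = dict[str, list[str]]


def match_incoming(
    incoming: Record,
    candidates: dict[str, Record],
    passes_blocking: Callable[[Record, Record], bool],
    classify_pair: Callable[[Record, Record], str],
    cluster_of: dict[str, str],
    mode: str,
):
    """Hypothetical sketch of 'records' vs. 'clusters' matching for one incoming record."""
    # Shared first step: keep only existing records that pair with the incoming
    # record under the blocking model. No pairs means no result in either mode.
    paired_ids = [rid for rid, rec in candidates.items() if passes_blocking(incoming, rec)]

    if mode == "records":
        # Record matching: classify every surviving pair with the ML model.
        return {rid: classify_pair(incoming, candidates[rid]) for rid in paired_ids}
    if mode == "clusters":
        # Cluster matching: report the clusters that the paired records belong to.
        return sorted({cluster_of[rid] for rid in paired_ids})
    raise ValueError(f"unknown mode: {mode!r}")


def same_name_prefix(a: Record, b: Record) -> bool:
    """Toy blocking rule for the demo only: the first four name characters agree."""
    if not a.get("name") or not b.get("name"):
        return False
    return a["name"][0][:4] == b["name"][0][:4]


def always_match(a: Record, b: Record) -> str:
    """Toy classifier for the demo only."""
    return "MATCH"


candidates = {"r1": {"name": ["glaxosmithkline"]}, "r2": {"name": ["acme corp"]}}
clusters = {"r1": "cluster-gsk", "r2": "cluster-acme"}

print(match_incoming({"name": ["glaxosmith"]}, candidates, same_name_prefix, always_match, clusters, "records"))
# {'r1': 'MATCH'}
print(match_incoming({"name": ["glaxosmith"]}, candidates, same_name_prefix, always_match, clusters, "clusters"))
# ['cluster-gsk']
print(match_incoming({"customer_phone": ["888 825 5249"]}, candidates, same_name_prefix, always_match, clusters, "records"))
# {}
```

The phone-only request in the last call produces no pairs, so the classifier is never consulted.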

Examples

The following examples show ways to ensure that the blocking model is set up correctly for LLM. Often the answer lies in the choice of tokenizer used in the blocking model. For full documentation on the available tokenizers, see the dedicated section on Tokenizers and Similarity Functions in our public docs.

Matching based on partial company names

When matching on names, it is often useful to apply a non-default tokenizer to the name field in the blocking model. As a simple example, “Will Jones” and “William J.” are 0% similar under the default tokenizer, because it relies on whole words to calculate similarity. If a bigram or trigram tokenizer is used instead, the similarity is non-zero, because these tokenizers compare word fragments.
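To make the tokenizer point concrete, the Python sketch below scores the two names with Jaccard similarity over two kinds of tokens: whole words (a rough stand-in for the default tokenizer) and character bigrams taken within each word. Both the tokenization details and the choice of Jaccard are assumptions for illustration; Tamr's tokenizers and similarity functions may produce different numbers. What carries over is the qualitative result: whole words share nothing, bigrams do.

```python
def word_tokens(text: str) -> set[str]:
    """Whole-word tokens, a rough stand-in for a word-based default tokenizer."""
    return set(text.lower().split())


def bigram_tokens(text: str) -> set[str]:
    """Character bigrams taken within each word (an assumed bigram scheme)."""
    grams: set[str] = set()
    for word in text.lower().split():
        grams.update(word[i:i + 2] for i in range(len(word) - 1))
    return grams


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection over size of the union (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


left, right = "Will Jones", "William J."
print(jaccard(word_tokens(left), word_tokens(right)))      # 0.0
print(jaccard(bigram_tokens(left), bigram_tokens(right)))  # 3 shared of 11 bigrams, about 0.27
```

The shared bigrams are “wi”, “il” and “ll”, which is exactly the fragment overlap the whole-word view cannot see.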

In the example below, we try to return LLM results based on a partial match on a company name.

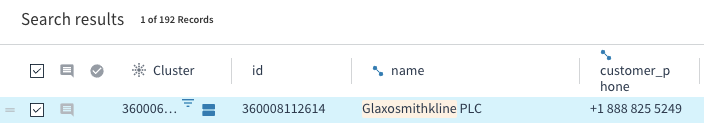

In Tamr there is a cluster for Glaxosmithkline (GSK) which contains records like this:

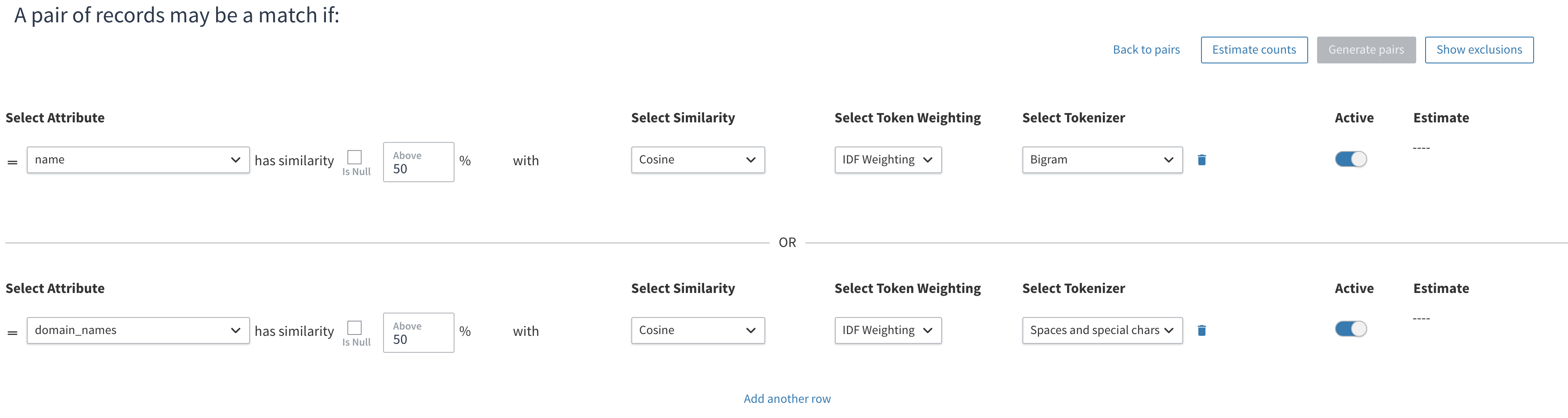

To return results for a partial spelling (or misspelling) of the name, we use the following (very loose) blocking model, which returns a record pair when the names are at least 50% similar:

Note in particular that we use the bigram tokenizer to allow for misspellings and partial spellings. With this configuration, LLM returns the following results for various incoming records:

| Type | Incoming Record | Top LLM Result (truncated) |
|---|---|---|
| Partial spelling | {"recordId": "123", "record": {"name": ["glax"]}} | No match found |
| Partial spelling | {"recordId": "123", "record": {"name": ["glaxosmith"]}} | {"matchedRecordId": "8848921401124397288", "suggestedLabel": "MATCH", "suggestedLabelConfidence": 0.029, "matchProbability": 0.6, "attributeSimilarities": {"name": 0.758}} |
| Misspelling | {"recordId": "123", "record": {"name": ["glaxosmtihklnie"]}} | {"matchedRecordId": "8848921401124397288", "suggestedLabel": "MATCH", "suggestedLabelConfidence": 0.029, "matchProbability": 0.6, "attributeSimilarities": {"name": 0.680}} |

Looking across the results, we still get matches even though we send in only one field, and only partial or incorrect data in that field. However, we do need to supply enough of the name to cross the 50% similarity threshold. The results also give a sense of what threshold would produce the desired outcomes. For example, setting a threshold of 75% in our blocking model would still allow the partial match on ‘glaxosmith’ (name similarity 0.758) but would stop the misspelling ‘glaxosmtihklnie’ (0.680) from returning anything, just as ‘glax’ already returns nothing.
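The threshold reasoning is simple arithmetic, sketched here in Python. It assumes, as the paragraph does, that the `name` similarities reported in the LLM results are a fair proxy for the blocking model's own similarity score; ‘glax’ is left out because no score is reported for it. The helper `passing_inputs` is hypothetical.

```python
# Name similarities copied from the LLM results table; treated here as a proxy
# for the blocking similarity (an assumption, since they come from the response).
reported_name_similarity = {
    "glaxosmith": 0.758,
    "glaxosmtihklnie": 0.680,
}


def passing_inputs(similarities: dict[str, float], threshold: float) -> list[str]:
    """Inputs whose similarity meets or exceeds the blocking threshold."""
    return sorted(name for name, sim in similarities.items() if sim >= threshold)


for threshold in (0.50, 0.75):
    print(f"{threshold:.0%}: {passing_inputs(reported_name_similarity, threshold)}")
# 50%: ['glaxosmith', 'glaxosmtihklnie']
# 75%: ['glaxosmith']
```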

In a real deployment, a threshold as loose as the 50% used here will probably not be feasible because of its impact on model performance. Also note that if we had used the default tokenizer in our blocking model, none of the above cases would return a match. If this seems concerning, recall that we are attempting to match a full customer record based on nothing more than a fragment of its name. It is entirely reasonable to expect to enter more information than a partial name in order to find a high-quality match (this would be akin to asking a shipping website to find your address based only on a partial spelling of your street name). In short: do not distort the model for LLM's sake, but do keep LLM in mind when designing the model.

Matching based on customer phone number

Let’s investigate what happens if we try to match on phone number alone. Recall that the phone number for our record is +1 888 825 5249.

| Type | Incoming Record | Top LLM Result (truncated) |
|---|---|---|
| No country code | {"recordId": "123", "record": {"customer_phone": ["888 825 5249"]}} | No match found |
| Different format | {"recordId": "123", "record": {"customer_phone": ["1-888-825-5249"]}} | No match found |

At this point you may be wondering why we are not getting any matches. The answer is that we haven’t given Tamr any signal that would allow this record to pass the blocking model in our project. Because the blocking model does not consider phone number at all, sending in only a phone number prevents Tamr from making any pairs. The lesson: for LLM to succeed, you must send in values for every attribute in at least one clause of the blocking model.
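That rule can be expressed as a pre-flight check on a request before it is sent to LLM. In this Python sketch the blocking model is modeled as a list of clauses, each a set of attribute names that must all be supplied; that representation and the function name `covers_a_blocking_clause` are assumptions for illustration, not Tamr's configuration format. Passing the check is necessary but not sufficient: the values must still be similar enough to clear the clause's threshold.

```python
def covers_a_blocking_clause(record: dict[str, list[str]], clauses: list[set[str]]) -> bool:
    """True if the record has a non-empty value for every attribute of at least one clause."""
    # Attributes that actually carry a value (blank strings do not count).
    supplied = {attr for attr, values in record.items() if any(v.strip() for v in values)}
    return any(clause <= supplied for clause in clauses)


# The example project blocks on name alone: a single one-attribute clause.
blocking_clauses = [{"name"}]

phone_only = {"customer_phone": ["888 825 5249"]}
name_and_phone = {"name": ["glaxosmtihklnie"], "customer_phone": ["888 825 5249"]}

print(covers_a_blocking_clause(phone_only, blocking_clauses))      # False
print(covers_a_blocking_clause(name_and_phone, blocking_clauses))  # True
```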

Matching based on customer name and phone number

Let’s now see what happens when we use customer name and phone number together.

| Type | Incoming Record | Top LLM Result (truncated) |
|---|---|---|
| Partial name but full phone | {"recordId": "123", "record": {"name": ["glax"], "customer_phone": ["+1 888 825 5249"]}} | No match found |
| Name misspelling and missing country code | {"recordId": "123", "record": {"name": ["glaxosmtihklnie"], "customer_phone": ["888 825 5249"]}} | {"matchedRecordId": "8848921401124397288", "suggestedLabel": "MATCH", "suggestedLabelConfidence": 0.342, "matchProbability": 0.8, "attributeSimilarities": {"customer_phone": 0.962, "name": 0.680}} |
| Partial name and different format with missing area code | {"recordId": "123", "record": {"name": ["glaxo"], "customer_phone": ["825-5249"]}} | {"matchedRecordId": "8848921401124397288", "suggestedLabel": "MATCH", "suggestedLabelConfidence": 0.270, "matchProbability": 0.6, "attributeSimilarities": {"customer_phone": 0.786, "name": 0.758}} |

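Comparing these results with the table in the first example, the only meaningful change is that Tamr’s confidence has increased: for the misspelled name, suggestedLabelConfidence rises from 0.029 to 0.342 and matchProbability from 0.6 to 0.8 once the phone number is added. Notably, the first row (a partial name with the full phone number) still returns no match. This is because adding the phone number does not raise the similarity from the blocking model’s point of view (the blocking model does not consider phone number); it only affects the predictions from Tamr’s ML model.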
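The two-stage behavior can be sketched in Python. The blocking gate below looks only at `name` with the loose 50% threshold, mirroring the example project, while `toy_score` averages every supplied attribute similarity. That averaging scorer is a deliberately simplistic, hypothetical stand-in for Tamr’s ML model, and the 0.30 name score is invented for illustration; 0.680 and 0.962 are the similarities reported above. The point is structural: adding `customer_phone` can move the score, but it cannot rescue a pair that fails the name-based gate.

```python
from typing import Optional

BLOCKING_ATTRIBUTES = {"name"}  # the example project blocks on name only
BLOCKING_THRESHOLD = 0.5        # the "very loose" 50% threshold


def passes_blocking(similarities: dict[str, float]) -> bool:
    """Gate on blocking attributes only; any other attribute is ignored here."""
    return all(similarities.get(attr, 0.0) >= BLOCKING_THRESHOLD for attr in BLOCKING_ATTRIBUTES)


def toy_score(similarities: dict[str, float]) -> float:
    """Hypothetical stand-in for the ML model: mean of all supplied attribute similarities."""
    return sum(similarities.values()) / len(similarities)


def match(similarities: dict[str, float]) -> Optional[float]:
    """Score only pairs that survive blocking; None plays the role of 'No match found'."""
    return toy_score(similarities) if passes_blocking(similarities) else None


name_only = {"name": 0.680}
name_and_phone = {"name": 0.680, "customer_phone": 0.962}
weak_name_and_phone = {"name": 0.30, "customer_phone": 1.0}  # hypothetical low name score

for sims in (name_only, name_and_phone, weak_name_and_phone):
    score = match(sims)
    print(sims, "->", "No match found" if score is None else f"{score:.3f}")
# prints 0.680, then 0.821, then No match found
```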

Summary

When using Tamr’s LLM capabilities, keep in mind that to get any matches, the data you send in must contain information relevant to the blocking models used in your project. If the incoming record does not contain enough information to pass the blocking model, no pairs will be made, regardless of what else is sent in. Information sent in beyond what is needed to pass the blocking model adjusts the content and confidence of Tamr’s predictions.

Note also that the examples above are illustrative only: they show the flexibility and the care that designing a blocking model for LLM requires. Your data will have different needs.
