Training Tamr Core to Categorize Records

To provide initial training, you find a record for each distinct category in your taxonomy.

Tamr Core machine learning models rely on expert guidance to detect similarities and differences. After a curator prepares your project's unified dataset and taxonomy, you select a small, representative sample of records from the dataset and "label" each one with the correct node of the taxonomy.

The model uses this sample to learn about your data and then attempts to find similarities in other records that might indicate that they belong in the same category. As a result, the quality of the categorized sample that you provide to Tamr Core contributes more to the success of the model than the quantity of records you label. See Working with Tamr Core Machine Learning Models.

Initial Categorization Training Example

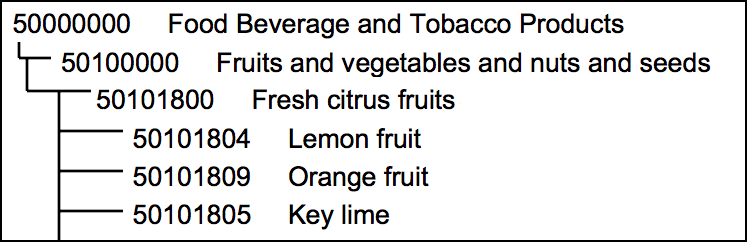

A company uses the United Nations Standard Products and Services Code (UNSPSC) as the taxonomy for categorizing products so that they can do spend analysis. The UNSPSC is a four-tier taxonomy. The following image shows one branch of the taxonomy, starting from the broadest categorization and getting more specific tier by tier.

A brief excerpt of the UNSPSC taxonomy.

Focusing just on this fragment of the taxonomy, your goal is to find records that you are sure represent each of the leaf nodes on the bottom tier, and, if possible, records that fit nodes on the other tiers. As a general rule, your sample should include three records for each leaf node and one to three records for each of the other nodes.
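The rule of thumb above can be checked against a list of labels before you consider the sample complete. The following is a minimal sketch in plain Python, not a Tamr Core API: the `PARENT` mapping, the `coverage_gaps` function, and the way the node names are arranged into tiers are all assumptions made for illustration, and the sketch uses the lower bound of one record for non-leaf nodes.

```python
from collections import Counter

# Hypothetical taxonomy fragment (child -> parent, None marks the root).
# The node names come from this page; their arrangement is illustrative.
PARENT = {
    "Food Beverage and Tobacco Products": None,
    "Fruits and vegetables and nuts and seeds": "Food Beverage and Tobacco Products",
    "Fresh citrus fruit": "Fruits and vegetables and nuts and seeds",
    "Lemon fruit": "Fresh citrus fruit",
    "Orange fruit": "Fresh citrus fruit",
    "Key lime": "Fresh citrus fruit",
}


def leaf_nodes(parent):
    """Return the nodes that are not the parent of any other node."""
    parents = {p for p in parent.values() if p is not None}
    return sorted(node for node in parent if node not in parents)


def coverage_gaps(labels, parent, per_leaf=3, per_other=1):
    """Map each under-labeled node to the number of records still needed."""
    counts = Counter(labels)
    leaves = set(leaf_nodes(parent))
    gaps = {}
    for node in parent:
        target = per_leaf if node in leaves else per_other
        missing = target - counts[node]
        if missing > 0:
            gaps[node] = missing
    return gaps


# Hypothetical labels already applied to the sample, one entry per record.
sample_labels = ["Lemon fruit"] * 3 + ["Orange fruit"] * 2 + ["Fresh citrus fruit"]
print(coverage_gaps(sample_labels, PARENT))
```

In this example the sketch reports that "Key lime" still needs three records, "Orange fruit" needs one, and the two upper tiers need one each.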

Guidelines for Initial Categorization Training

As you find the records for your representative sample, keep the following guidelines in mind:

- Distribute labels evenly across all terminal nodes: if you label records for "Lemon fruit" and "Orange fruit", find and label some "Key lime" records too.

- Let the distribution of labels reflect the distribution of data: if your business doesn't handle key limes, you won't have any records to label as "Key lime". However, if you do not provide an example of a key lime record, the model does not learn to suggest "Key lime" for any other records.

- Be as specific as you can: if the record is for grapefruit, don't label it as "Fresh citrus fruit" unless there is no leaf node for "Grapefruit."

- Go beyond the description: Tamr Core uses data for every attribute that your curator has included in machine learning when trying to discover commonalities and generate suggestions for other records. Maybe a record with a description of "lemons" has another value that indicates that this was for lemon-shaped plastic bottles of lemon juice, not fruit. Examine the value for every attribute that has the include in machine learning indicator next to its name to be sure you find the most accurate category. See Configuring Inclusion of Attributes in Machine Learning.

- Choose the most accurate label: if you can't tell exactly what type of food a record reflects, but you're sure that it is a food, use the data that is available to label it "Food Beverage and Tobacco Products" or "Fruits and vegetables and nuts and seeds."

- Avoid labeling every record that you are sure of: your objective for this task is to provide a broad sample so that Tamr Core can do categorization at scale for you, not for you to do all of the record labeling yourself.

See Adding Categories to Records.
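The first guideline, distributing labels evenly across terminal nodes, lends itself to a quick tally. The sketch below is an illustration in plain Python under stated assumptions: `label_balance` is a hypothetical helper, not part of Tamr Core, and the label list stands in for whatever export or notes you keep of the records you have labeled.

```python
from collections import Counter


def label_balance(labels, leaves):
    """Summarize how evenly labels are spread across leaf nodes.

    Returns the count per leaf, the leaves with no labels at all, and the
    difference between the most- and least-labeled leaves that have labels.
    """
    counts = Counter(labels)
    per_leaf = {leaf: counts[leaf] for leaf in leaves}
    unlabeled = [leaf for leaf, n in per_leaf.items() if n == 0]
    labeled_counts = [n for n in per_leaf.values() if n > 0]
    spread = max(labeled_counts) - min(labeled_counts) if labeled_counts else 0
    return per_leaf, unlabeled, spread


# Hypothetical leaf nodes and labels, using node names from this page.
leaves = ["Lemon fruit", "Orange fruit", "Key lime"]
labels = ["Lemon fruit"] * 4 + ["Orange fruit"] * 3

per_leaf, unlabeled, spread = label_balance(labels, leaves)
print(per_leaf)
print("No labels yet:", unlabeled)
print("Spread among labeled leaves:", spread)
```

A leaf that appears in the unlabeled list is either a gap to fill or, as the second guideline notes, a category your data genuinely does not contain.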

Categorization After Initial Training

After you complete initial training, the project workflow continues with these iterative tasks:

- Curators apply feedback and update results. See Updating Categorization Results.

- The model uses your sample to compare values that now have associated categories to values in unlabeled records and suggests categories for those records.

- Verifiers assign records with suggested categories to reviewers and other experts. See Assigning Records in Categorization Projects.

- Reviewers upvote and downvote suggestions, provide new categorizations, and add comments to provide more feedback and help Tamr Core become increasingly accurate. See Categorizing Records.

As your team learns more about the data and how the model reacts to feedback, curators may make adjustments to the unified dataset, such as which attributes to include in machine learning.

Adding Categories to Records

After a curator completes schema mapping by updating the unified dataset, every record on the categorized records page has an add categorization link in the Categorization column.

Train Tamr Core by supplying categories:

- Navigate to the categorized records page by selecting the option to the right of Categories. The name of this page is project-specific and reflects what it is you are categorizing.

- Locate the records that are in your representative sample. Use Configure Table at the bottom of the page to configure column visibility, or use column sorting and search as needed.

- To supply the category for one record, select add categorization.

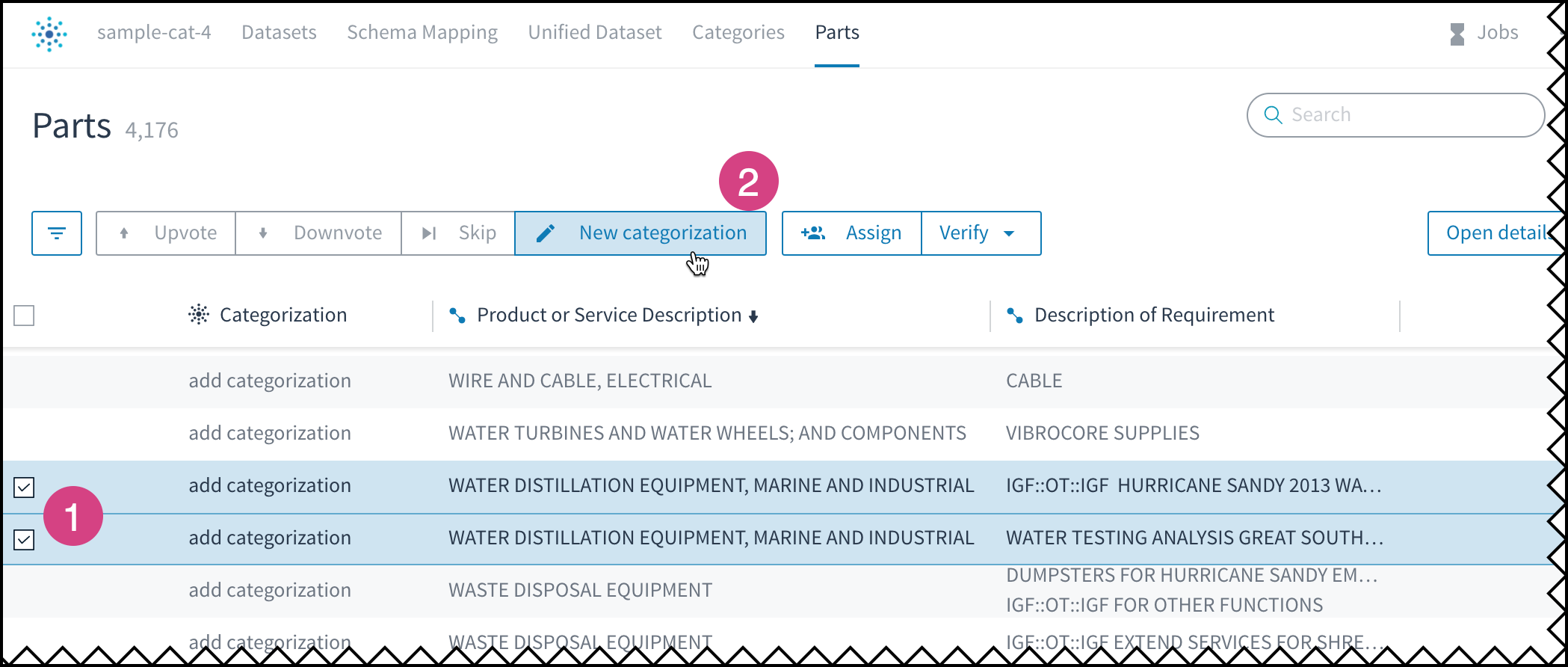

To supply the same category for multiple records, move your cursor over the left edge and use the checkboxes that appear to select the records, then choose New categorization.

Choose add categorization for a single record, or select 1) multiple records then 2) New categorization.

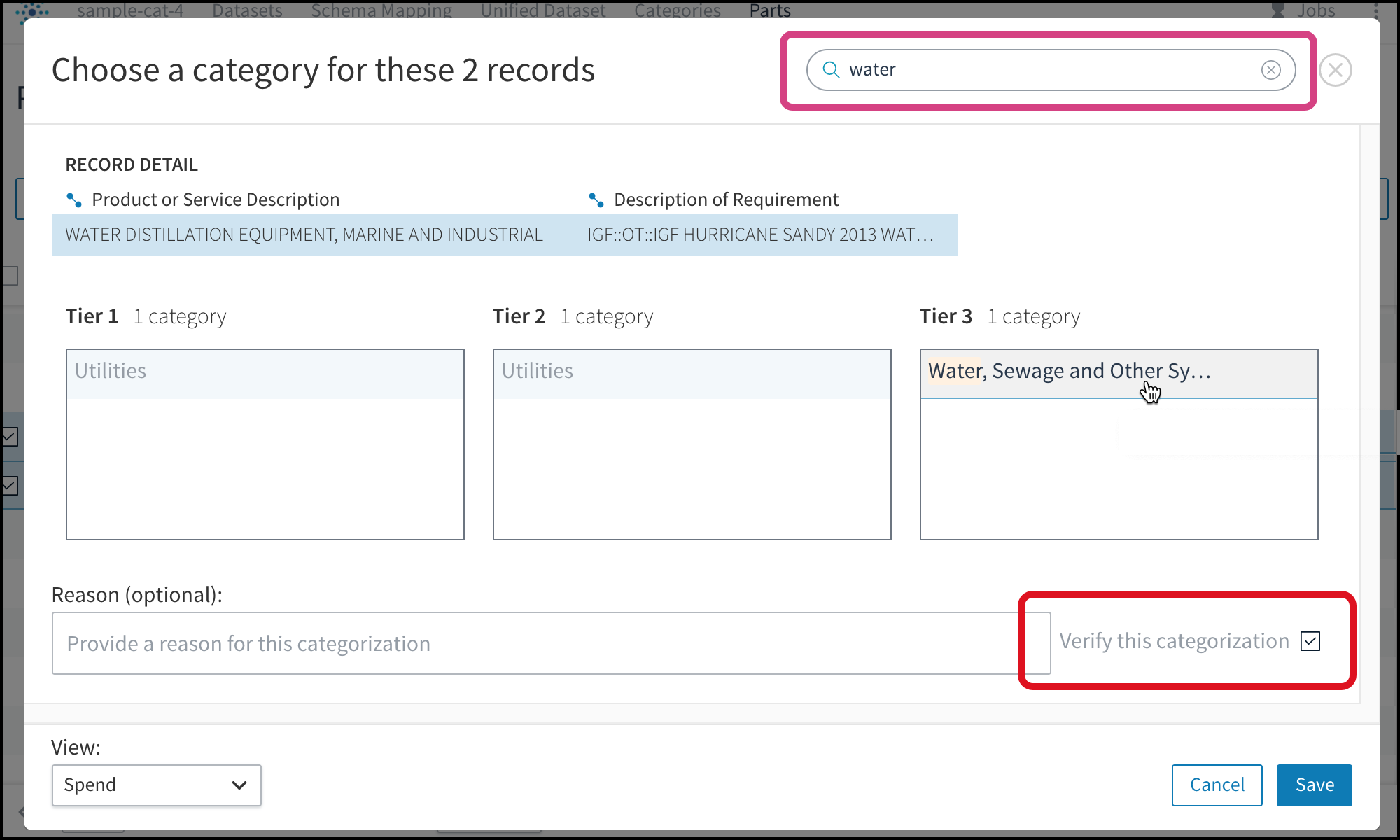

- In the Choose a category dialog box, browse through the tiers to find the categorization node you want to apply.

Note: You can also search the taxonomy for a string.

Searching for a string reduces the categories shown to only those that match; initial categories should be verified.

- (Optional) Provide a comment in the Reason field.

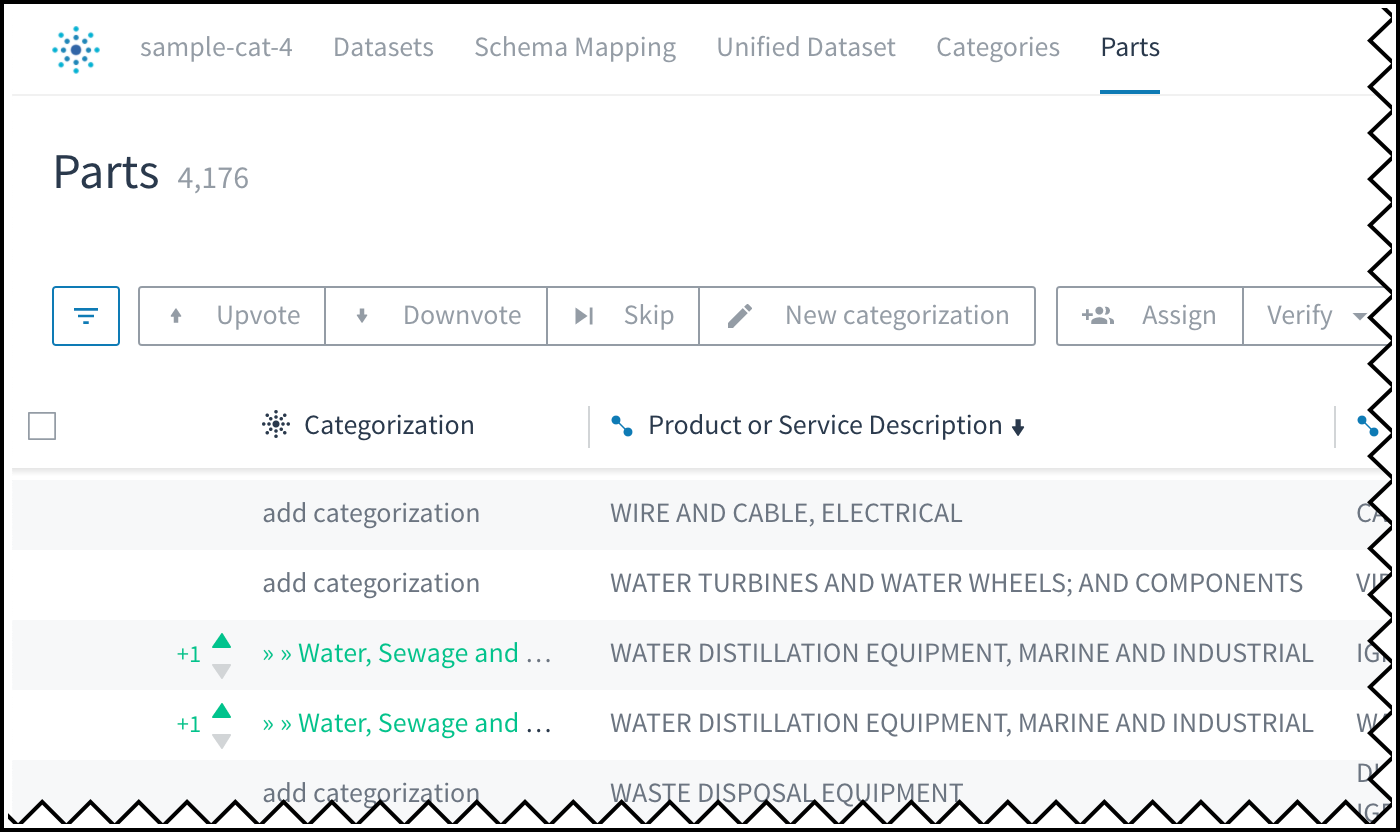

Note: By default, your categorization is marked as verified by the Verify this categorization checkbox. During initial training, you should be sure of every category that you choose for the records in your sample. As a result, you are unlikely to need to clear this checkbox.

- After you Save, the categorized records page shows the assigned category in the Categorization column. Green font indicates that the category is verified, while the "+1" and up arrow indicators reflect the expert input you just provided.

Records with a category verified by an expert.
