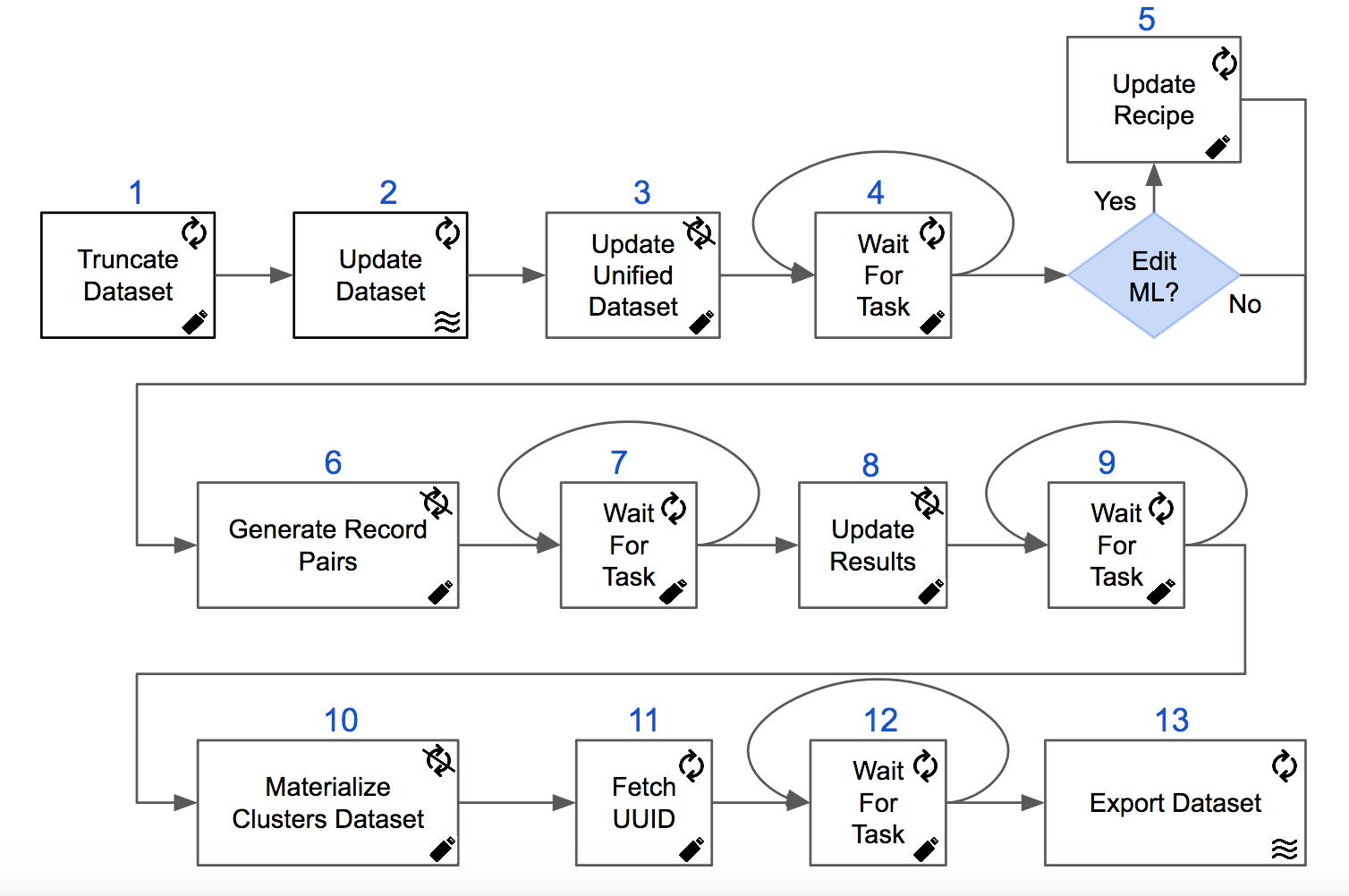

Batch Operation of a Mastering Project

Run Tamr in batch mode from dataset truncate through mastering and exporting.

API calls to run a mastering project in batch operation (truncate and load mode).

Batch Operation

1. Truncate Dataset: POST /dataset/datasets/{name}/truncate

Truncate the records of the dataset {name}. This removes all existing records from the dataset.

2. Update Dataset: POST /dataset/datasets/{name}/update

Update the records of the dataset {name} using the command CREATE. Because of Step 1, all records in this step are effectively inserted (i.e. no updates occur).

3. Update Unified Dataset: POST /recipe/recipes/{recipeId}/run/records

Update the unified dataset using its {recipeId} and the operation keyword records. Additionally, capture the uuid of the submitted task from the response.

4. Wait For Task: GET /dataset/tasks/uuid/{uuid}

Poll the storage status of the task submitted in Step 3 using the captured uuid until storageStatus=COMMITTED is received.
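The polling described above can be sketched as a small helper. This is a minimal illustration, not Tamr's client: `wait_for_task`, `fetch_json`, `poll_seconds`, and `max_polls` are hypothetical names, and the sketch assumes the task endpoint returns a JSON object with a top-level `storageStatus` field. The caller supplies `fetch_json`, a function that performs an authenticated GET against the Tamr host and returns the decoded body.

```python
import time
from typing import Callable, Dict


def wait_for_task(
    uuid: str,
    fetch_json: Callable[[str], Dict],
    poll_seconds: float = 5.0,
    max_polls: int = 120,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict:
    """Poll GET /dataset/tasks/uuid/{uuid} until storageStatus is COMMITTED.

    fetch_json is a caller-supplied (hypothetical) function that takes an
    API path and returns the decoded JSON response as a dict.
    """
    path = f"/dataset/tasks/uuid/{uuid}"
    for _ in range(max_polls):
        task = fetch_json(path)
        # Assumption: storageStatus is a top-level field of the response.
        if task.get("storageStatus") == "COMMITTED":
            return task
        sleep(poll_seconds)
    raise TimeoutError(f"task {uuid} was not COMMITTED after {max_polls} polls")
```

Bounding the number of polls keeps a stalled task from blocking the batch run indefinitely. The sketch does not check for failure states, because their names are not given here.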

If the machine learning (ML) configuration has been edited (Toggle Inclusion in Machine Learning), proceed to Step 5; otherwise, skip to Step 6.

5. Update Recipe: POST /recipe/recipes/{id}/populate

6. Generate Record Pairs: POST /recipe/recipes/{recipeId}/run/pairs

Generate record pairs using the recipe's {recipeId} and the operation keyword pairs. Additionally, capture the id of the submitted job from the response.

7. Wait For Task: GET /dataset/tasks/uuid/{uuid}

Poll the storage status of the task submitted in Step 6 using the captured uuid until storageStatus=COMMITTED is received.

8. Update Results: POST /recipe/recipes/{recipeId}/run/trainPredictCluster

Apply the entity resolution model using its {recipeId} and the operation keyword trainPredictCluster. Additionally, capture the id of the submitted job from the response.

9. Wait For Task: GET /dataset/tasks/uuid/{uuid}

Poll the storage status of the task submitted in Step 8 using the captured uuid until storageStatus=COMMITTED is received.

10. Materialize Clusters Dataset: POST /export

Materialize the clusters dataset {datasetId} using a specified export configuration and data format. Additionally, capture the id of the submitted job from the response.

11. Fetch UUID: GET /job/jobs/{id}

Using the id captured in Step 10, fetch the job details and capture the uuid.

12. Wait For Task: GET /dataset/tasks/uuid/{uuid}

Using the uuid captured in Step 11, poll the storage status of the task until storageStatus=COMMITTED is received.

13. Export Dataset: GET /export/read/dataset/{datasetId}

Export the materialized clusters dataset {datasetId}.
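The full sequence, including the conditional Step 5, can be laid out as an ordered plan of calls before anything is sent. The sketch below only builds the list of endpoints and makes no HTTP requests. `batch_plan`, `Step`, `recipe_ids`, and `ml_config_edited` are hypothetical names introduced for illustration. The recipe ids are passed per operation because it is not stated here whether every recipe step shares one id. The `{uuid}` and `{id}` placeholders are left unfilled because they are only known at run time, from the responses to earlier calls.

```python
from typing import Dict, List, Tuple

Step = Tuple[str, str, str]  # (label, HTTP method, path)


def batch_plan(
    dataset_name: str,
    recipe_ids: Dict[str, str],
    clusters_dataset_id: str,
    ml_config_edited: bool = False,
) -> List[Step]:
    """Return the ordered API calls for a truncate-and-load batch run.

    recipe_ids maps each operation ("records", "populate", "pairs",
    "trainPredictCluster") to the recipe id to use for it (an assumption:
    pass the same id for all keys if your project uses a single recipe).
    """
    def recipe(op: str) -> str:
        return f"/recipe/recipes/{recipe_ids[op]}"

    # The uuid is captured at run time, so the placeholder stays literal.
    wait: Step = ("Wait For Task", "GET", "/dataset/tasks/uuid/{uuid}")

    steps: List[Step] = [
        ("Truncate Dataset", "POST", f"/dataset/datasets/{dataset_name}/truncate"),
        ("Update Dataset", "POST", f"/dataset/datasets/{dataset_name}/update"),
        ("Update Unified Dataset", "POST", recipe("records") + "/run/records"),
        wait,
    ]
    # Step 5 applies only when the ML configuration has been edited.
    if ml_config_edited:
        steps.append(("Update Recipe", "POST", recipe("populate") + "/populate"))
    steps += [
        ("Generate Record Pairs", "POST", recipe("pairs") + "/run/pairs"),
        wait,
        ("Update Results", "POST",
         recipe("trainPredictCluster") + "/run/trainPredictCluster"),
        wait,
        ("Materialize Clusters Dataset", "POST", "/export"),
        ("Fetch UUID", "GET", "/job/jobs/{id}"),
        wait,
        ("Export Dataset", "GET", f"/export/read/dataset/{clusters_dataset_id}"),
    ]
    return steps
```

Separating the plan from its execution makes the order easy to review and log, and the same list can drive whichever HTTP client and authentication scheme the deployment uses.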
